Content from Introduction to privacy enhancing technologies (PET)

Last updated on 2024-11-19 | Edit this page

Overview

Questions

- What techniques exist for analyzing data while protecting privacy?

- What are issues with only using data anonymization or pseudonymization?

- What are the building blocks to create a privacy enhancing analysis?

- What what are some non-technical challenges for performing privacy enhancing analysis?

Objectives

- Understand PET, FL, MPC, homomorphic encryption, differential privacy

- Understand how different PET techniques relate

- Understand scenarios where PET could be applied

- Understand horizontal vs vertical partitioning

- Decompose a simple analysis in a federated way

- Understand that there is paperwork to be done (DPIA etc.)

Introduction

In this course, we will explore how privacy-enhancing technologies (PETs) can support your research. On the first day, we will cover some of the core concepts of PET analysis, followed by a hands-on session where we’ll apply these techniques using the vantage6 user interface. We will also discuss how to manage PET analysis collaborations, one of the vantage6’s key distinguising features.

In the second part of the workshop, we will focus on the python client, which can do the same as the UI, but also much more. You will also learn how to create a federated algorithm using the platform’s algorithm tools.

This course provides a high-level overview of PETs, focusing on their practical applications in data analysis. While we won’t dive into the detailed mathematics behind these technologies, we encourage you to explore other resources for those specifics. Our goal here is to equip you with enough knowledge to apply PETs effectively in your work.

Problem statement

The amount of data being generated nowadays is absolutely mind-boggling. This data can be a valuable resource for researchers. However, personal data should be handled with great care and responsibility because of its sensitive nature. This is why there are privacy regulations in place like GDPR to prohibit easy access to this wealth of data.

However, often researchers are not interested in the personal records that make up the data, but rather in the insights derived from it. This raises an intriguing question: Can we unlock these valuable insights in a manner that upholds and respects privacy standards?

In classic data analysis, all data is copied over into a single place. This makes it very easy to use conventional data analysis software and tools to gain insights.

Unfortunately this way of working does not respect the privacy of the people contained within the dataset. All their personal details end up at another party.

1. Other problems with copying data

There are other issues with copying data to a central place that are not directly related to privacy. Some examples:

- The original data owner loses control of the data

- Results in multiple versions of the data

- What to do when the data needs to be updated?

- If there was consent in the first place, how can you retract consent?

1. Data sharing story time

Now it’s your turn. Have you personally experienced any issues doing research with data collected into a central place? Discuss with your peers.

In this lesson, we will discuss various privacy-enhancing technologies (PET) that can be used to analyze data in a privacy-preserving manner, and what the risks associated with different technologies are.

Data anonymization and pseudonymization

The first step in the process is often data anonymization. Personal identifiable information will in this case be removed so that individuals stay anonymous. Data pseudonimization is a similar process, but in this case, the records will be assigned an id that will make it possible to link individuals across datasets.

While data anonymization and pseudonymization are often a good first step, there is no guarantee that the data will never be reidentified. A famous example of reidentification is the story of the Netflix prize. The Netflix prize was an open competition to build the best recommender system to predict user ratings for films based on previous ratings. The data was anonymized, but in 2007 two researchers from The University of Texas at Austin were able to identify a large number of users by matching the dataset with film ratings on the Internet Movie Database (IMDB).

Federated data analysis

There are different ways in which privacy risks can be mitigated. For example, a well-known technique is to send the data to a trusted third party (TTP). The data can then be analyzed at that location in a traditional way. However, there are issues with this technique. When the data is copied to the TTP, the original owner loses control over it. Another issue with it is that this technique results in a single point of failure. If the security at the TTP is breached, all the data it handled could be exposed.

In this course we will focus on federated analysis. In a federated setting, the data with the data owner, who keeps full control over it. In this case, it is not the data that travels, but the analysis itself. The system sends a query or instruction to the data and only the results will get back to the user. The results are often akin to a form of aggregation of the data. This can be in the shape of traditional statistics like the mean, or it can be more intricate like a machine learning model. When data is aggregated, it doesn’t have a direct link with an individual anymore. Since the aggregated data cannot be be traced back to individuals individuals directly, the privacy is considered protected.

Aggregating the data does not ensure complete protection of person-level information, but it certainly makes it less likely that this will happen. It is usually the case that the larger the dataset, the lower the risk of data leakage. For instance, if you would like to take the mean of all records that fit a certain requirement, like age > 90, and there happens to be only one records that fits the requirement, the aggregation will be equal to the one matching individual record.

Federated learning

The term federated learning was introduced in 2016 by researchers at Google (McMahan et al.) and refers to a “loose federation of participating devices (which we refer to as clients) which are coordinated by a central server.” In traditional federated learning, the clients train machine learning models, and only the updates of the models are sent back to the central server. The central server combines the updates from all the individual clients into one final machine learning model.

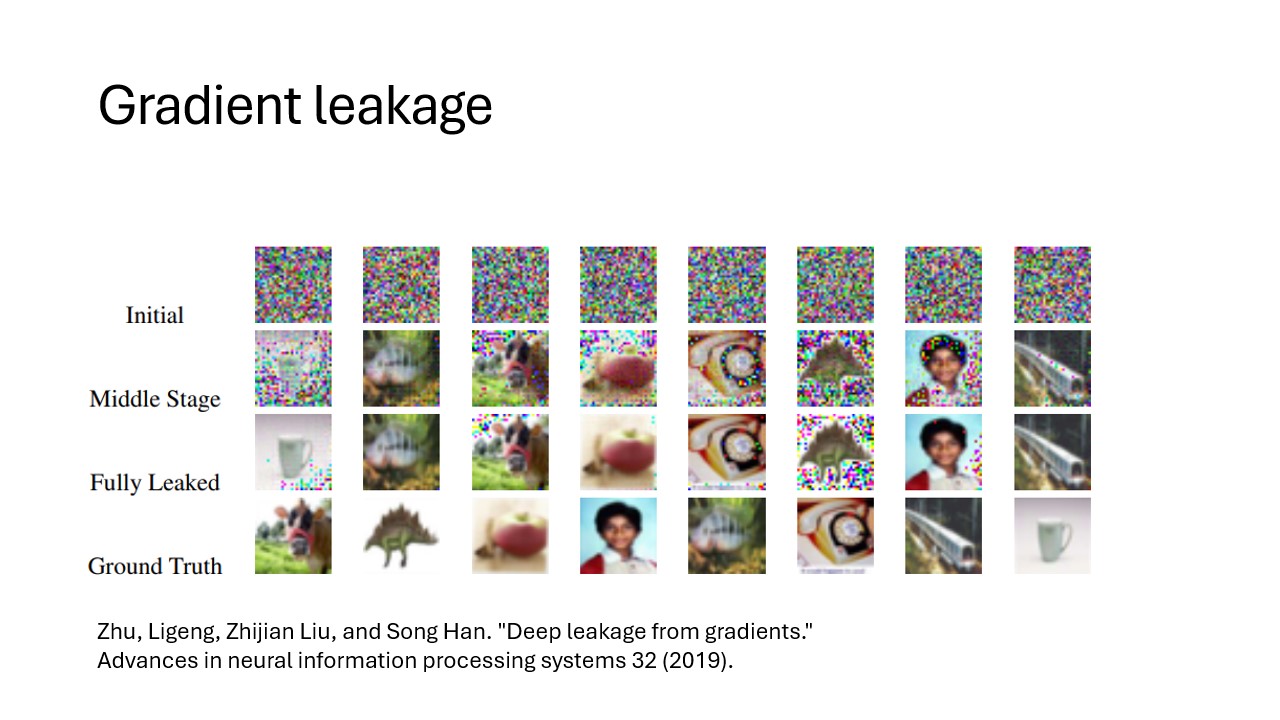

There are caveats to using this type of data analysis though. Although the data transmitted from the clients to the server are an aggregation of the raw data, researchers have found a way to use this data to reconstruct the original data. This vulnerability is called gradient leakage.

Secure Multiparty Computation

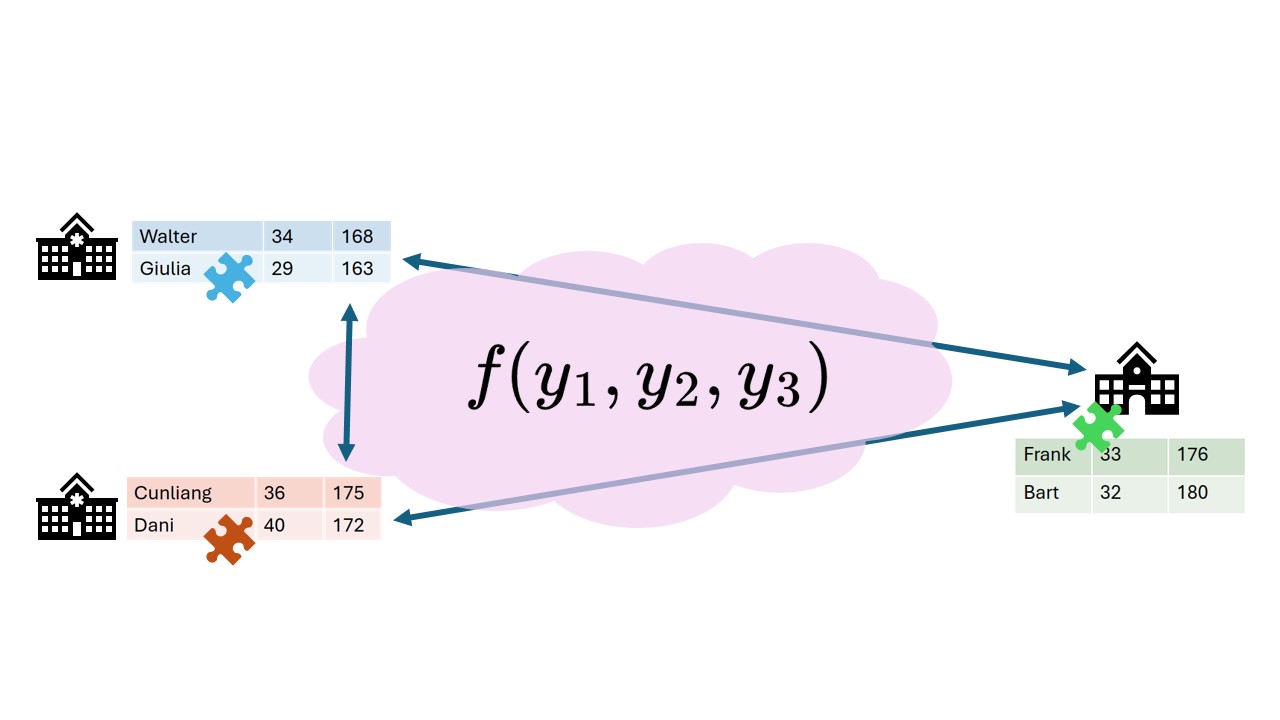

There are different solutions to prevent the reconstruction of raw data. One solution is to make sure that no party other than the data owner is actually able to see the intermediate data. One branch of techniques that can be used for this is Secure Multiparty Computation (MPC). With MPC, computations are performed collaboratively by multiple parties. Data is encrypted in such a way that other parties cannot see the original values, but values of multiple parties can still be combined ( e.g. added or multiplied). A classic technique from the field of MPC is secret sharing. With this technique data is encrypted, after which pieces of the encryption are sent to the other parties. No single party will be able to reconstruct the original value. Only when a certain minimum of parties work together (n-1 in many cases) the original value can be retrieved.

When combining multiple values using secret sharing, this will result in the parties owning new puzzle pieces that when put together will reveal the result of the computation.

Secret sharing, an example

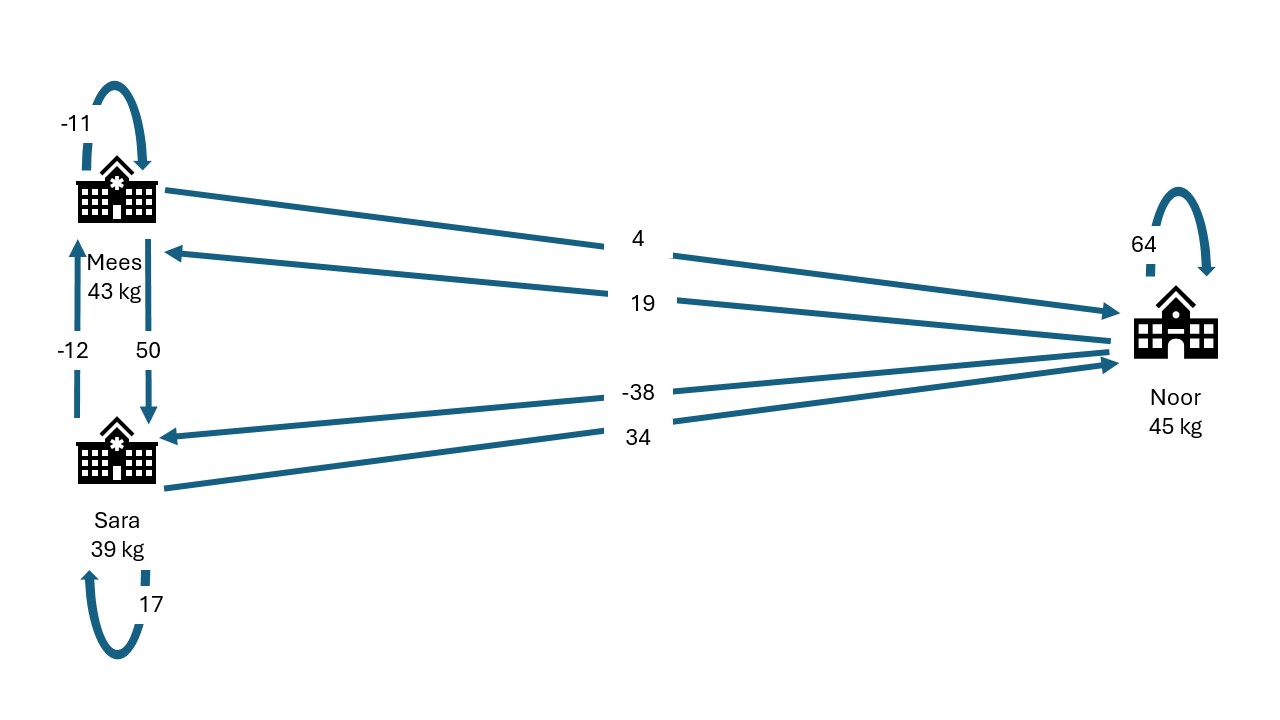

Mees, Sara and Noor want to know how much they weigh in total. Mees weighs 43 kg, Sara weighs 39, Noor weighs 45. All three they think of 2 random numbers \(r_1\) and \(r_2\) so that \(weight = r_1 + r_2 + x\). Finally they compute \(x\) by \(x=weight - r_1 - r_2\). These random numbers can be seen as encrypted data.

After computing the secret shares, they distribute these “cryptographical puzzle pieces” among their peers.

| Mees receives | Sara receives | Noor receives | Sum | |

|---|---|---|---|---|

| Mees generates: | -11 | 50 | 4 | 43 |

| Sara generates: | -12 | 17 | 34 | 39 |

| Noor generates: | 19 | -38 | 64 | 45 |

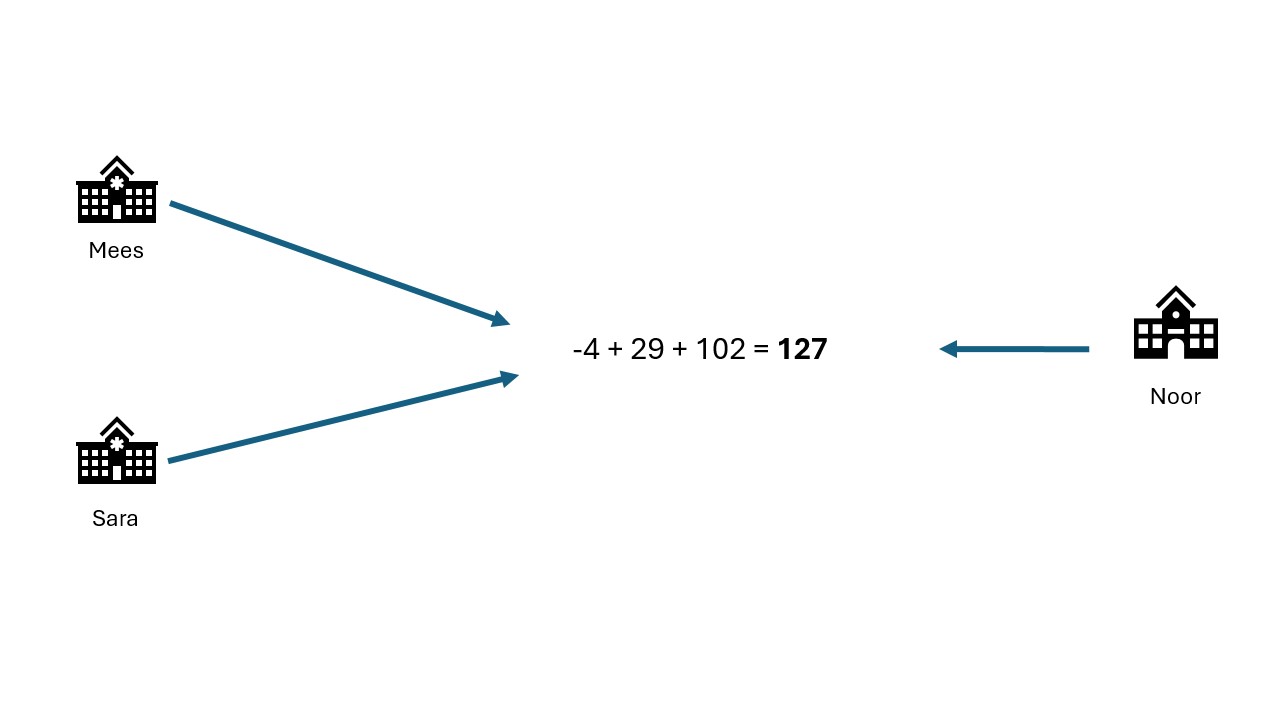

They sum their shares:

| Mees | -4 |

| Sara | 29 |

| Noor | 102 |

They add their sums together: -4 + 29 + 102 = 127 In this way, they have aggregated their data without sharing their individual data with anyone else.

Oh no! A hacker!

A hacker manages to get access to Mees’ computer that contains his data and the secret shares he received. He also knows the result of the aggregation. Will the secrecy of the three weights still be preserved? What if the hacker gets access to Sara’s computer as well? Will Noors privacy be protected?

If the hacker has access to the result, he can reconstruct Noors weight by subtracting Mees’ and Sara’s weight from the total. The secret shares are not adding any more relevant information.

3. Aggregation preserves privacy?

Consider the dataset with the 3 childrens weights again. The only unencrypted data everybody receives, is the result. Consider the situation where Sara knows Mees very well, and might know approximately how much he weighs. Do you think the privacy of the three individuals is properly preserved?

When receiving a sum from a set of 3 individuals, every record makes up roughly 30% of the final answer. That is a big contribution. It can be quite easy to reconstruct the individual records, for example by crossreferencing with other datasets. Also, if you are able to get your hands on 2 of the datapoints, you will be able to fully reconstruct the third one.

Usually there are guard rails in place when performing PET analysis to reject situations where there are very few datapoints, because the original data is too easy to reconstruct.

Trust is another factor here. If the different parties (Mees, Sara and Noor) have a high level of trust in eachother not to share their data, the risk level is lower than when the parties are known to occasionally leak their data, or don’t have proper security set in place.

Differential privacy

As mentioned before, aggregation of data will not always prevent leaks of sensitive information. Consider the example of Mees, Sara and Noor. We know their total weight is 127 kg. If Sara and Noor get together and subtract their weights off of the total, they will be able to infer how much Mees weighs.

An aggregation is fully differentially private when someone cannot infer whether a particular individual was used in the computation. In the field of differential privacy there are different techniques for adapting analyses to be more differentially private. Often these techniques involve adding noise to either the raw data or the result of the analysis. This makes the result less precise, but with the added benefit that it will be more difficult to infer the original data.

The figure below shows a differential privacy technique where a random subset of the data is replaced with random values. This gives an individual plausible deniability regarding whether they were part of the original dataset. Their data might have been used, or it might have been replaced with noise.

Blocks upon blocks

The previously mentioned techniques are not used in isolation, but are usually stacked on top of eachother to mitigate the privacy risks that are relevant within the usecase. Typically, the process begins by anonymizing or pseudonymizing the data. With vantage6, the data is then placed in a federated setting. Then, the data is analyzed using federated learning, which may also include, for instance, MPC protocols to further safeguard data privacy. Additionally, noise may be added to the raw data as well before it is analyzed, using techniques from differential privacy.

Data partitioning

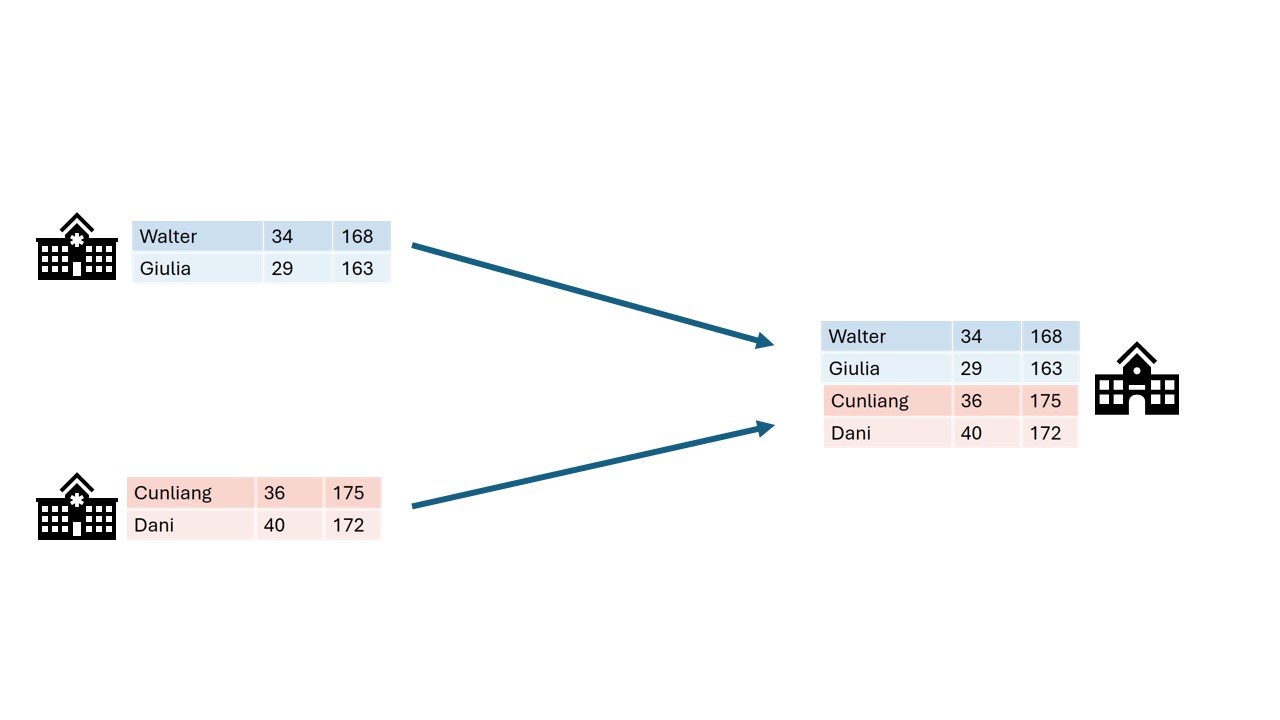

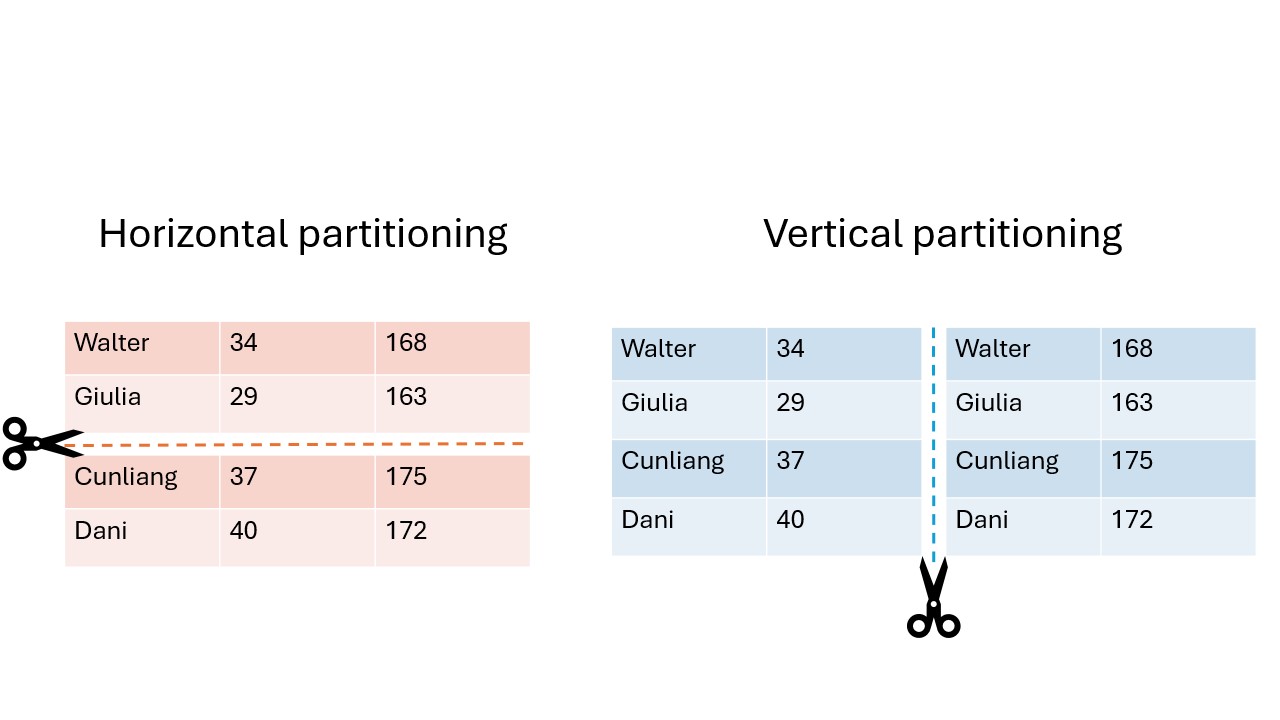

Data sharing challenges come in many different shapes and sizes, but in the end, the goal of the researchers is often to analyze data as if it were available in one big table in one place. There are 2 main ways in which the dataset can be separated over different sources: horizontal and vertical partioning.

In horizontal partitioning, this giant table has been snipped in pieces by making horizontal cuts. The result is that information of an individual record will stay in one place, but the records themselves have been scattered around in different locations. A common example of horizontal partitioning is when different hospitals have the same data on different patients.

In vertical partitioning, the cuts have been made vertically. Columns have now been divided over different locations. For example, this would be the case if a hospital has data on a set of patients and the GP has different information for the same set of patients. This type of partitioning is usually more challenging because often a way needs to be found to link identities across datasources. Vertical partitioning requires different types of privacy enhancing algorithms than horizontal partitioning.

Data can even be horizontally and vertically partitioned at the same time. In these cases, it may be necessary to combine multiple techniques. For example, you might want to combine census data with medical records from hospitals. This is vertically partitioned because the census and medical features are stored in different places. On top of that, you might want to combine multiple hospitals, that all store their records separately. Since the medical records are physically separated as well, it is horizontally partitioned.

Technology doesn’t solve everything

You have now learned about various technologies for analyzing data while preserving privacy of individuals. However, it should be emphasized that these technologies do not solve all your data sharing problems. Rather, they are only a small piece of the puzzle. In research projects involving privacy enhancing technologies, a lot of work goes into complying with regulations and building trust.

Since these projects have a risk of affecting the privacy of individuals, a Data Protection Impact Assessment (DPIA) is usually required. This is a process that will help identify and minimize privacy risks of a project and is required by the GDPR. There is already a DPIA available for vantage6

Apart from procedures required by GDPR there might be other regulations in place enforced by the owners of the data (e.g. hospitals). For healthcare specifically there will be the European Health Data Space (EHDS). EHDS builds upon the GDPR and ensures the exchange and reuse of healthcare data in a safe and secure way.

The specific situation of a project can affect the way in which the data is allowed to be processed. Some privacy enhancing technologies might be allowed in one project but prohibited in another. It is always important to stay transparent about privacy risks of the technologies you intend to use.

Another factor in performing PET analysis is data harmonization. All parties need to make sure that their data is in the right shape to be combined. For instance, if you have two datasets where one stores the height of patients in a column “height” in centimeters, and another dataset where it is stored in meters in the column “patient_height” you cannot perform your analysis. You will need to make sure all datasets follow the same standards and schemas in order to process them together. There are a couple of datastandards out there to help you with that, such as OMOP CDM or FHIR together with SNOMED-CT.

In conclusion, PETs will help you to extract valuable insights from sensitive data, but they are only one aspect of the process. It is also important to consider other factors such as regulations and data preprocessing.

- Privacy enhancing analysis can derive insights from data without seeing individual records.

- Privacy enhancing analysis usually starts with the anonymization or pseudonymization of the data.

- In federated data analysis the analysis moves to the data, while in classic analysis the data moves around.

- In secure multiparty computation, computations are performed collaboratively without any one party being able to see all the raw data.

- Techniques from differential privacy add noise to the data to make it harder to reconstruct the original records from an aggregation.

- Privacy enhancing analyses usually stack multiple techniques on top of each other to provide multiple layers of protection.

- Horizontal partitioning means the records are split, while in vertical partitioning the features are split.

- Technology is only one part of the story, when doing research on privacy sensitive data.

Content from vantage6 basics

Last updated on 2024-10-03 | Edit this page

Overview

Questions

- Why should I use vantage6?

- How does vantage6 work?

- How do federated algorithms run in vantage6?

- What will be available in vantage6 in the future?

Objectives

- List the high-level infrastructure components of vantage6 (server, client, node)

- Understand the added value of vantage6

- Understand that there are different actors in the vantage6 network

- Understand that the vantage6 server does not run algorithms

- Explain how a simple analysis runs on vantage6

- Understand the future of vantage6 (policies, etc.)

Why choose vantage6

vantage6 is a platform to execute privacy enhancing techniques (PETs). Several alternative platforms for PETs are available, but vantage6 is unique as it provides:

- Open source and free to use under Apache-2.0 licence.

- Container orchestration for privacy enhancing techniques.

- Easily extensible to different types of data sources.

- Algorithms can be developed in any language.

- A Graphical User Interface is provided to operate the platform.

- Other applications can connect to vantage6 using the API.

- Managing and enforcing collaboration policies

- Minimal network requirements at data stations

Project administration in vantage6

vantage6 encompasses a project administration system that allows the user to manage permissions and access to the resources, while assuring the protection of the data. The fundamental concepts of the administration system are defined as follows:

- An Organization is a group of users that share a common goal or interest (e.g., a consortium, an institute, etc.).

- A Collaboration involves one or more organizations working together towards a shared objective.

- A Node is a vantage6 component with access to the organization data, which is capable of executes algorithms on it. It represents the organization’s contributions to the network.

- A Task is a request for the execution of a given analysis algorithm on one or more organizations within a collaboration. These execution requests are handled by the corresponding organizations’ nodes.

- A user is a person that belongs to one organization who can create tasks for one or more organizations within a collaboration.

- An algorithm is a computational model or process -that adhere to the vantage6 framework-, which can be securely distributed to nodes for execution on the corresponding organization’s data.

- An algorithm store is a centralized platform for managing pre-registered algorithms. This serves as an alternative to using algorithms from unknown authors or those lacking transparency regarding their development process and status.

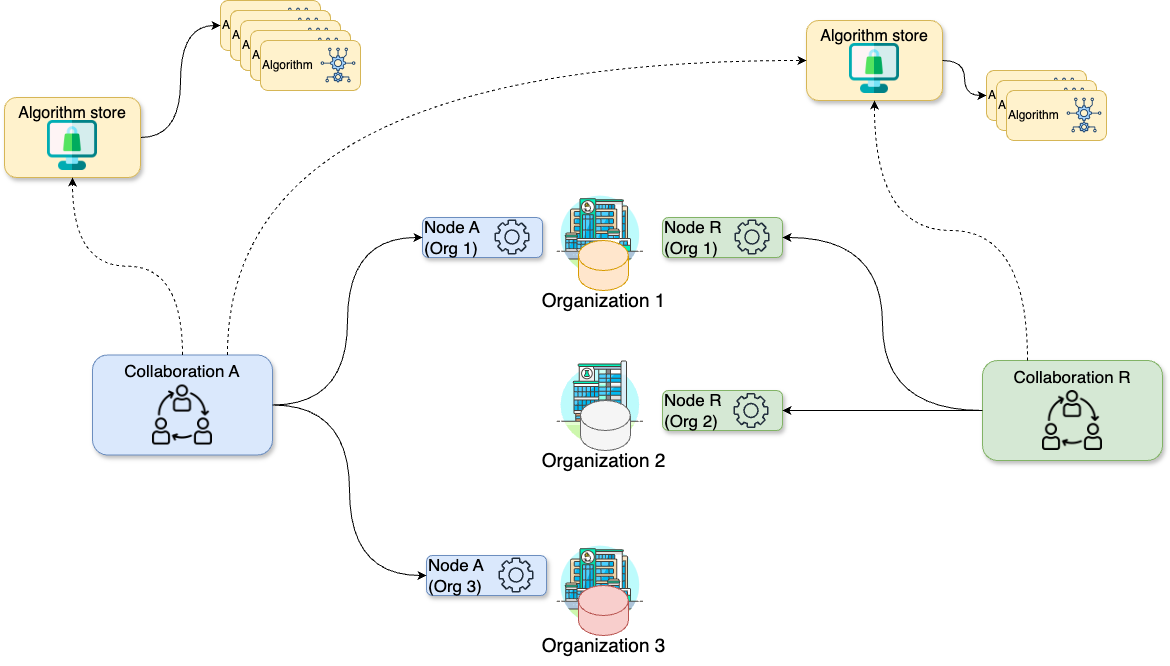

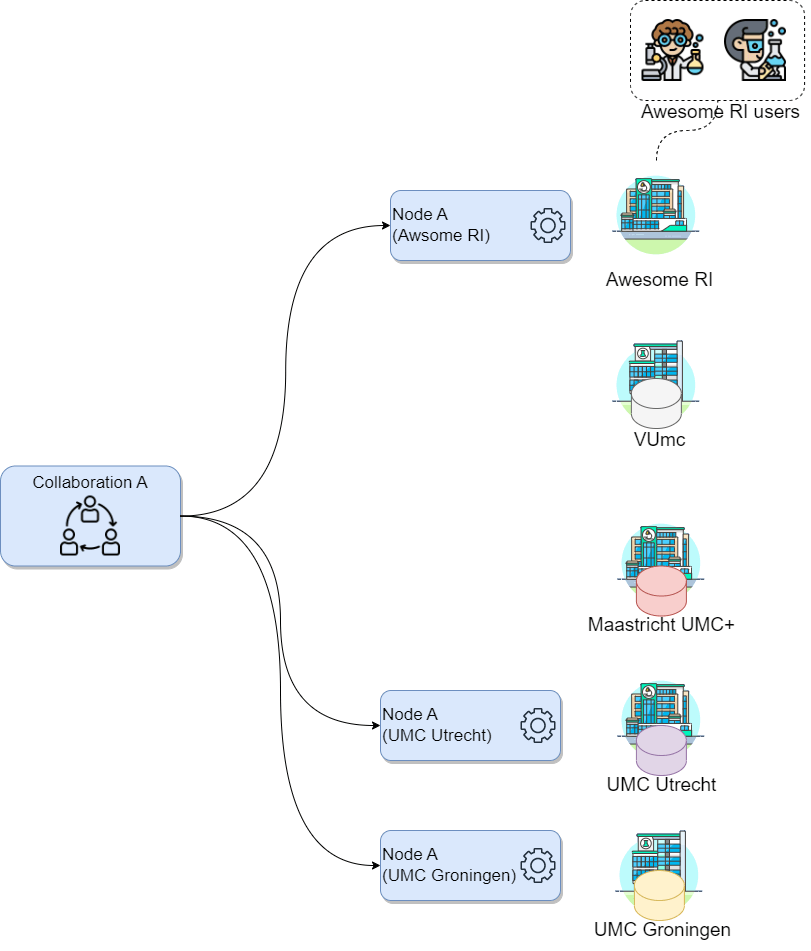

The diagram below illustrates a collaboration between two

organizations. In this scenario, users from Organization 1

and Organization 3 — with the appropriate credentials — can

request the execution of tasks within Collaboration

A. In this case, a user from Organization 1 might

request the execution of an algorithm (previously

registered in an algorithm store trusted by the

collaboration) across all participating organization nodes. In response,

each node from the involved organizations executes the

algorithm on its local data. The resulting (aggregated)

data is then sent back to the server, where it can be accessed by the

requesting user. To enhance the security of the communication, messages

between organizations can be encrypted. In this case, an organization

can have a public key that the other collaborating organizations have to

use in order to exchange messages.

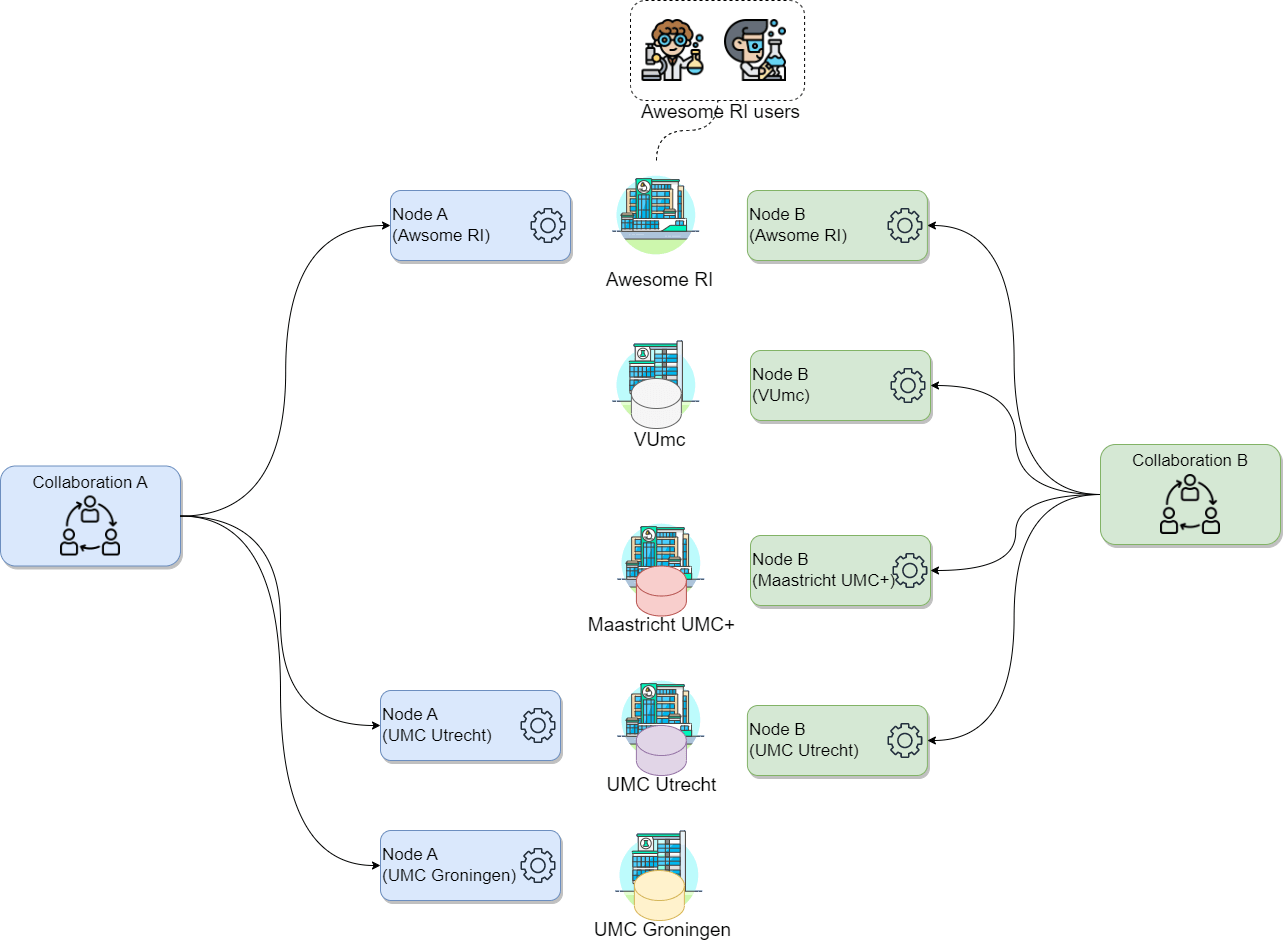

The following diagram expand the previous scenario further: what if

Organization 1 needs to participate on an additional

collaboration with another

organization (e.g., Organization 2)? In

this case, Organization 1 will have two running nodes, one

for each collaboration. Moreover, as also depicted on the diagram below,

each collaboration can make use of one or more

algorithm stores:

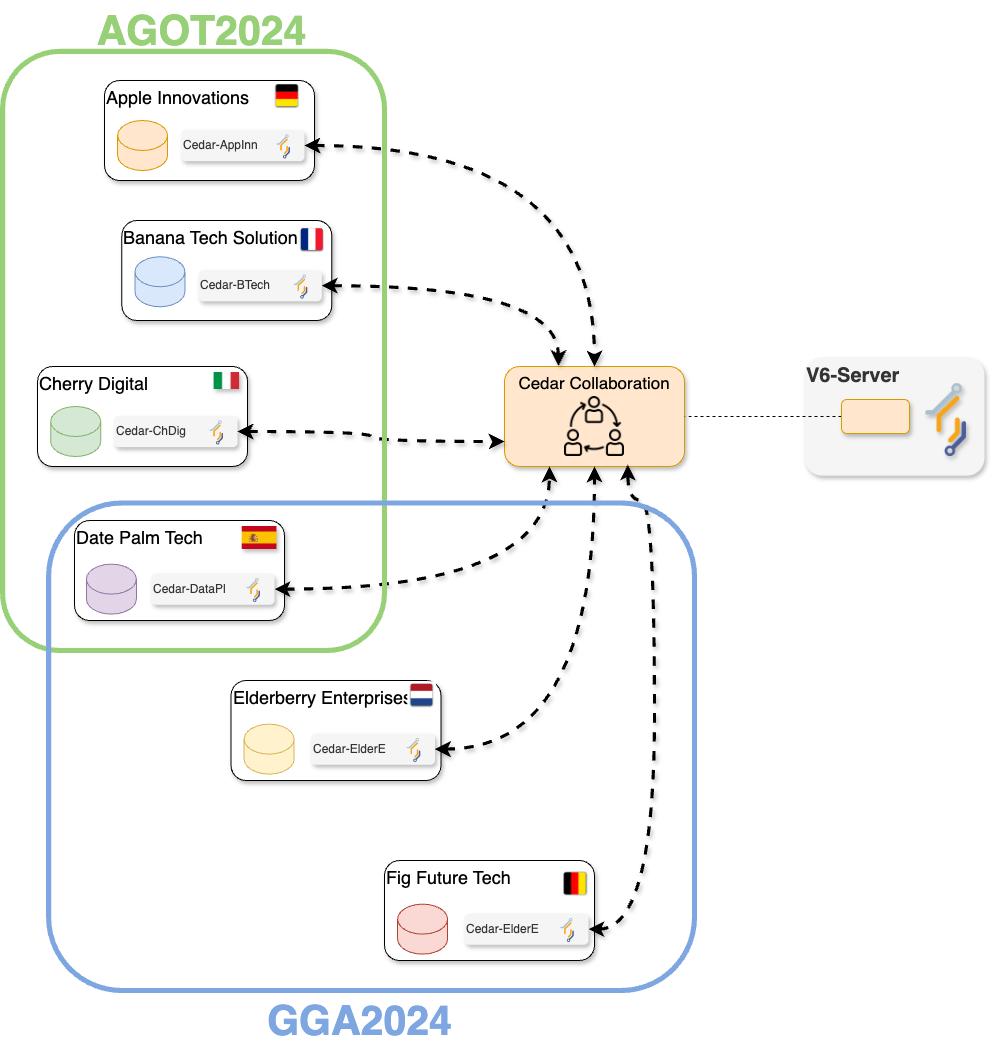

Finally, the concept of study is an important one when using vantage6 for data analysis. A study represents a subset of organizations within a given collaboration that are engaged in a specific research question. By setting up studies, you can more efficiently assign tasks to a specific group of organizations within a collaboration and better manage the results of these analyses.

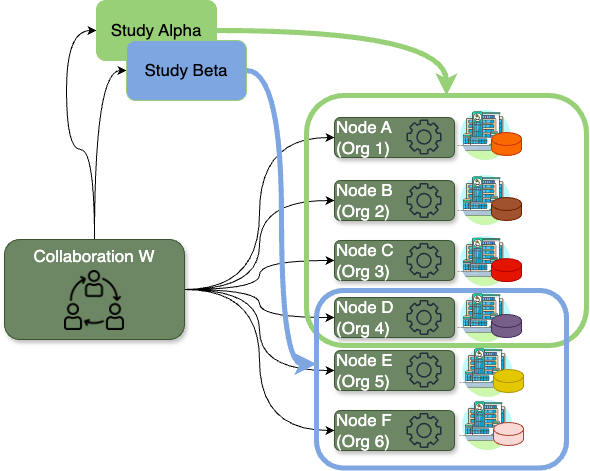

For example, consider the Collaboration W below, which

includes six organizations. This collaboration might involve two

distinct research questions: one that requires data from organizations

1, 2, 3, and 4, and another that focuses on data from organizations 4,

5, and 6. By establishing Study Alpha and

Study Beta, you, as a researcher, can target your data

analysis tasks in three different ways: you can address the entire

Collaboration W (including nodes A to

F), focus on Study Alpha (nodes A

to D), or concentrate on Study Beta (nodes

D to F).

Challenge 1: Mapping vantage6 to “real life”

Let’s consider a scenario where you, on behalf of your research institute, want to conduct a new study on a particular illness across three major academic hospitals in the Netherlands: VUmc in Amsterdam, Maastricht UMC+, and UMC Utrecht, as these have valuable data related to the illness. Consider the following:

- Your research institute has an existing collaboration (with a different purpose, not related with yours) with UMC Utrecht and UMC Groningen. Hence, there is a vantage6 node already running on your institution for the said collaboration.

- You will be conducting this study with a colleague from your institute named Daphne. Both of you are already registered on the organization but without access to the existing collaborations.

How would the concepts described above map to your potential use case?

- Which organizations will you need to add to your collaboration?

- How many new nodes would you need to set up and on which organizations?

- How many users would be created?

- In this case the organizations would be the academic hospitals as well as your own organization: VUmc, Maastricht UMC+, UMC Utrecht and your research institute. Note that UMC Utrecht must be added to the new collaboration despite being already part of an existing one.

- One node for every organization in the collaboration, so 4. Note that UMC Utrecht needs a new node despite already having one, as the existing one is for a different collaboration.

- There is no need to create new users, as these are already registered on the organization. Note that the users are linked only to the organization, not to the nodes.

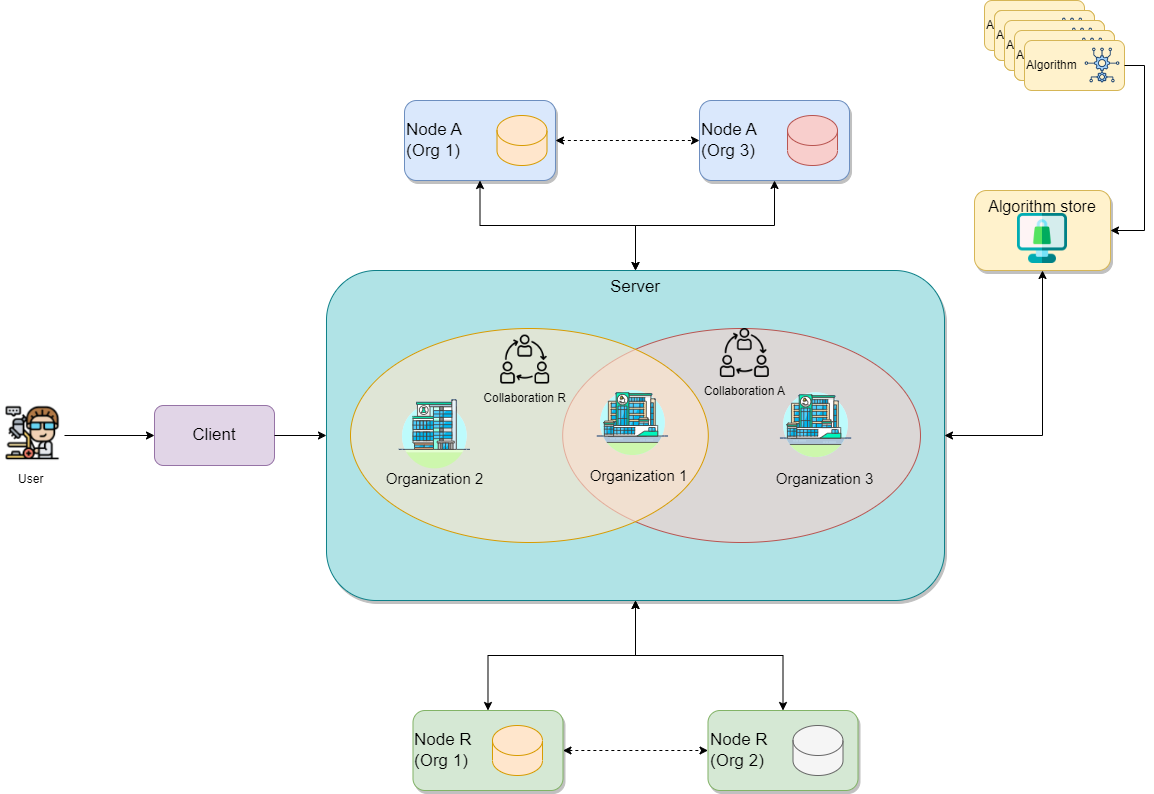

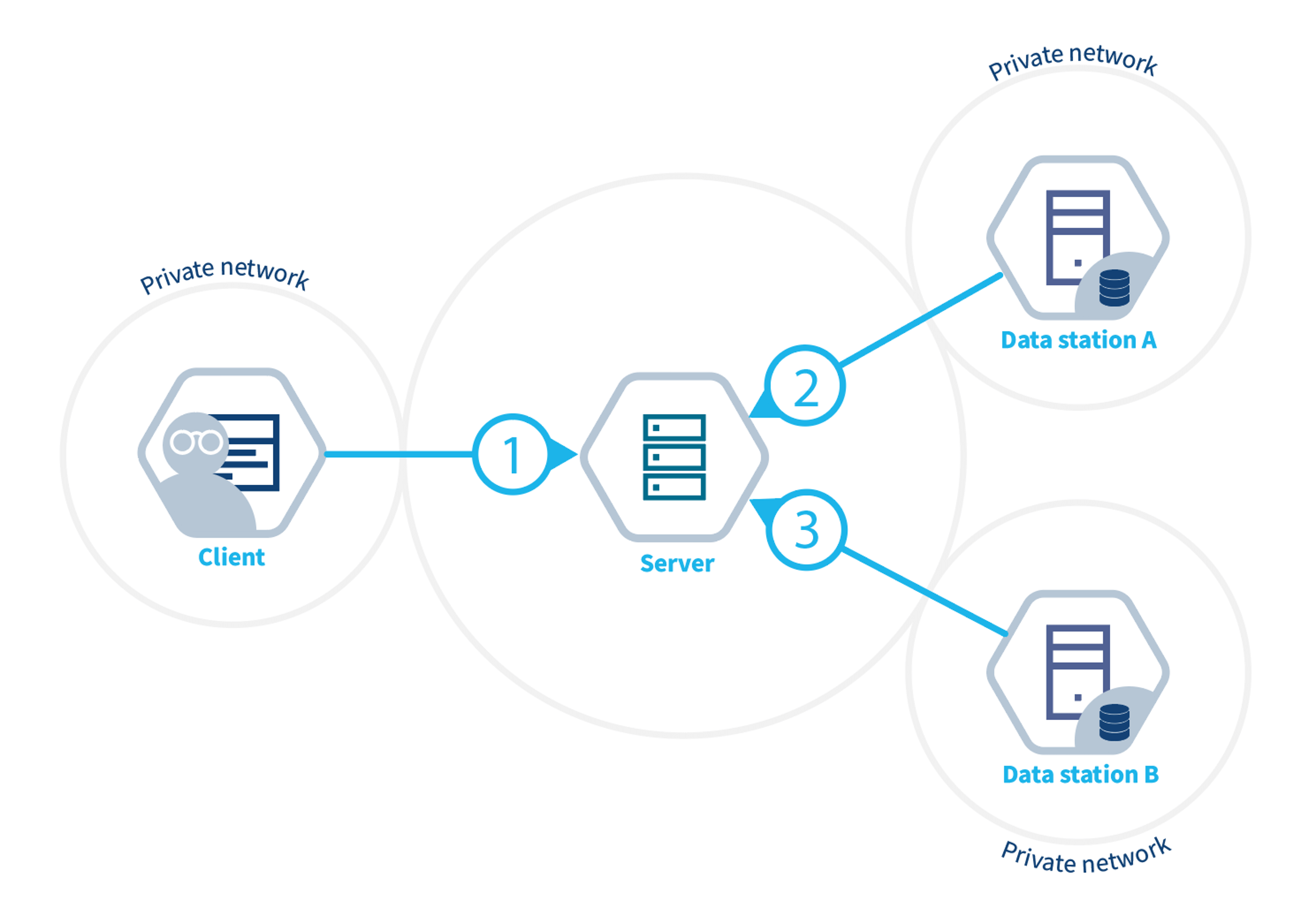

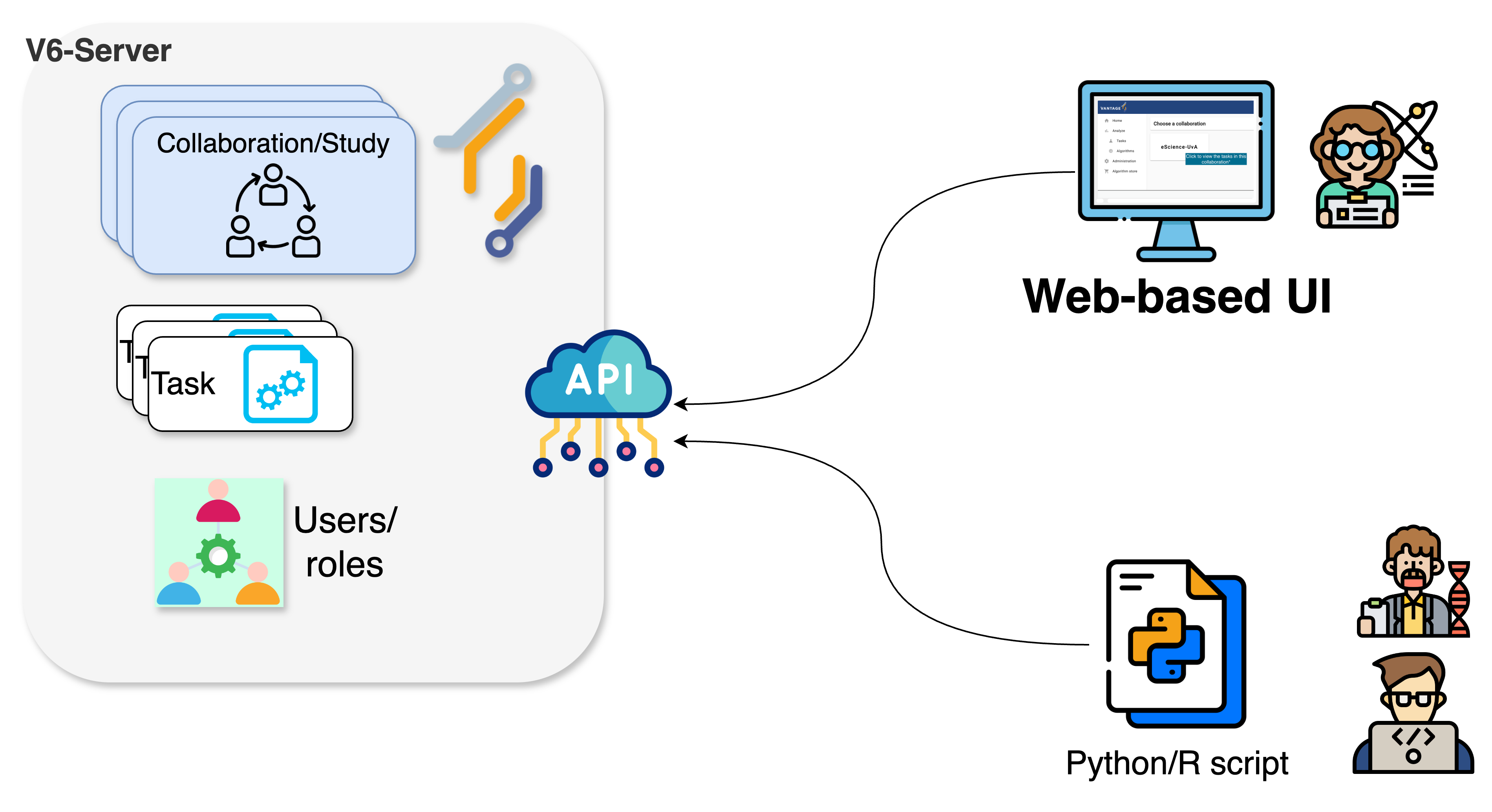

The vantage6 infrastructure

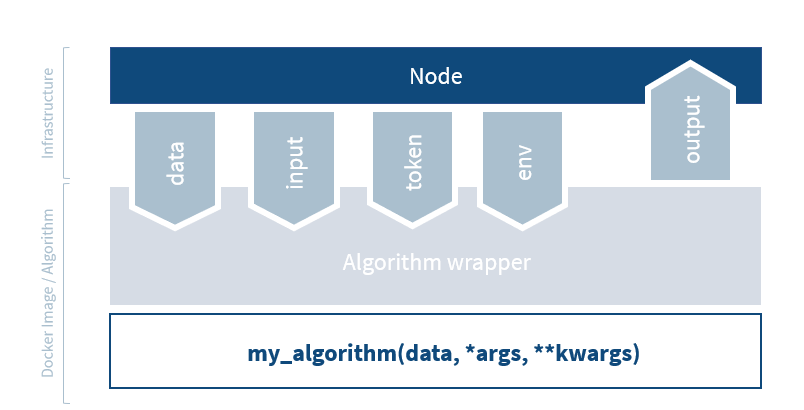

Now that we have an overview of how vantage6 manages the project resources and how it can be used to setup the analysis framework, we can see how vantage6 works on a technical level and how the infrastructure maps the aforementioned concepts. In vantage6, a user can pose a question through a client to the vantage6 server. Each organization with sensitive data contributes one node to the network. The nodes collect the computation request from the server and fetches the algorithm from the algorithm store to answer it. When the algorithm completes, the node sends the aggregated results back to the server.

On a technical level, vantage6 may be seen as a container orchestration tool for privacy preserving analyses. It deploys a network of containerized applications that together ensure insights can be exchanged without sharing record-level data.

Let’s explain in some more detail what these network actors are responsible for, and which subcomponents they contain.

Server

The vantage6 server acts as communication hub between clients and nodes. The server tracks the status of the computation requests and handles administrative functions such as authentication and authorization. It consists of multiple applications:

Vantage6 server: Contains the users, organizations, collaborations, tasks and their results. It handles authentication and authorization to the system and acts as the communication hub for clients and nodes.

Docker registry: Contains algorithms stored in container images which can be used by clients to request a computation. The node will retrieve the algorithm from this registry and execute it. It is possible to use public registries for this purpose like Docker hub or Github Containers. However it is also possible to host your own registry, for example a Harbor instance.

Data Station

The data station hosts the local data and the node (vantage6-node). The Vantage6 node is responsible for executing the algorithms on the local data. It protects the data by allowing only specified algorithms to be executed after verifying their origin. The node is responsible for picking up the task and running them in parallel, executing the algorithm and sending the results back to the server. For more details see the technical documentation of the node.

Client

A user or application who interacts with the vantage6-server. They create tasks, retrieve their results, or manage entities at the server (i.e. creating or editing users, organizations and collaborations).

The vantage6 server is an API, which means that there are many ways to interact with it programmatically. There are however a number of applications available that make is easier for users to interact with the vantage6 server:

User interface: The user interface is a web application (hosted at the server) that allows users to interact with the server. It is used to create and manage organizations, collaborations, users, tasks and algorithms. It also allows users to view and download the results of tasks. Use of the user interface recommended for ease of use.

Python client: The vantage6 python client

is a Python package that allows users to interact with the server from a Python environment. This is especially usefull for data scientists who want to integrate vantage6 into their workflow.

Algorithm store

While a vantage6-supported research infrastructure offers a strong defense against many data privacy risks, there remains one crucial security aspect that falls outside the platform’s scope: the validation of the code that will run on this infrastructure. For instance, the administrators of the nodes running within each organization are responsible for defining which algorithms (i.e., which container images) will be allowed for execution on the respective collaborations. As this is a critical and complex task that entails activities like code analysis and verification, working with algorithms from trusted sources is the primary line of defense against potential threats.

Vantage6’s algorithm store is a repository for trusted algorithms within a certain project that aims to enhance trustworthiness by offering a centralized platform for managing pre-registered algorithms. This serves as an alternative to using algorithms from unknown authors or those lacking transparency regarding their development process and status. The algorithm store currently allows researchers to explore which algorithms are available and how to run them. This, streamlines task execution requests within collaborations. Also, the algorithm store integrates additional information to the algorithm metadata such who developed and reviewed the algorithm. Only after complying with the review policies of a store, a new algorithm will be published in the store.

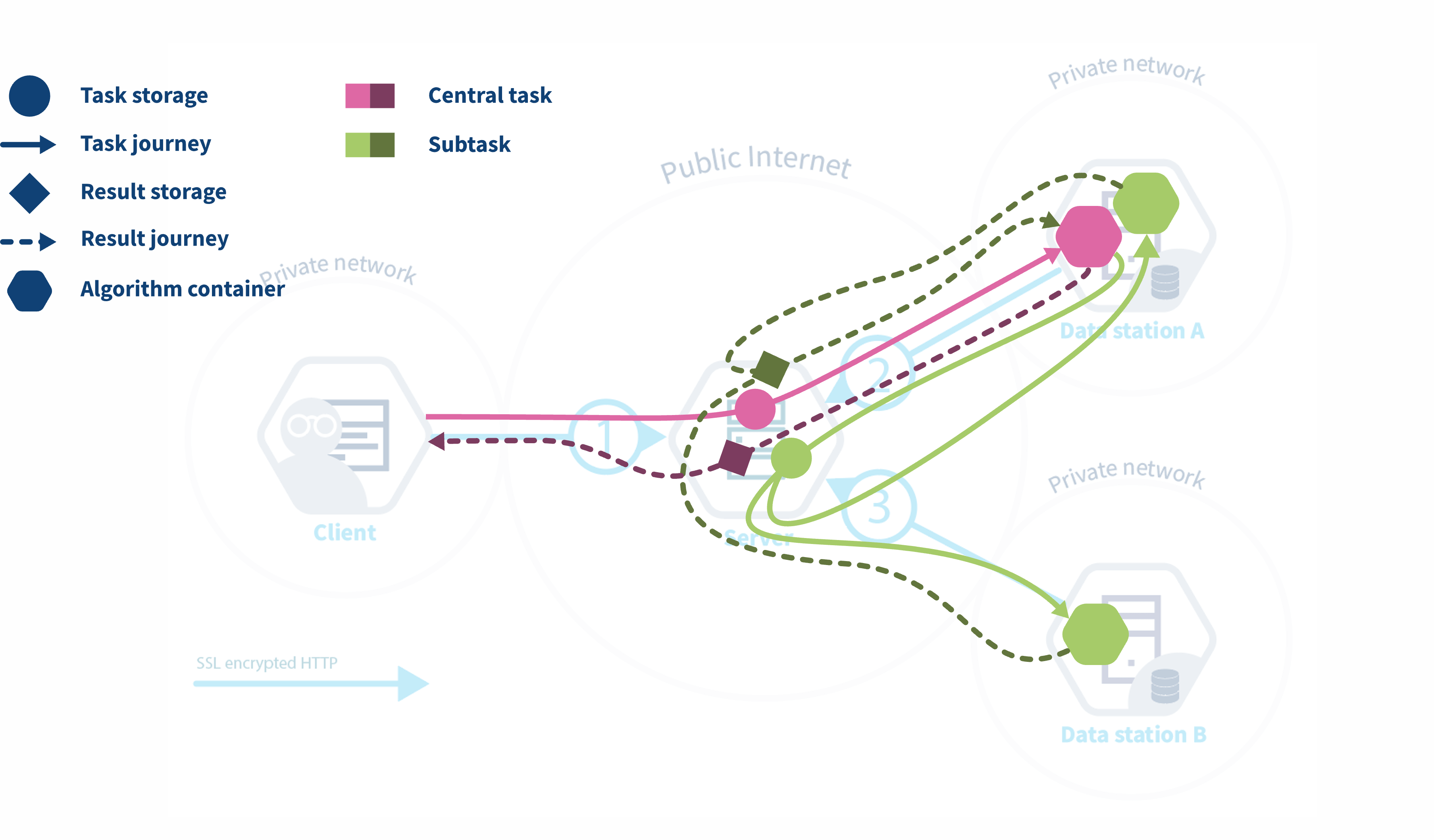

The workflow of a task running in vantage6

The diagram below illustrates what happens when a request is sent by a user to vantage6. In this scenario, a user — with the appropriate credentials — can request the execution of tasks within using the UI. In this case, the user might request the execution of an algorithm (previously registered in an algorithm store trusted by the collaboration) across all participating organization nodes. In response, each node from the involved organizations executes the algorithm on its local data. The resulting (aggregated) data is then sent back to the server, where it can be accessed by the requesting user. To enhance the security of the communication, messages between organizations can be encrypted. In this case, an organization can have a public key that the other collaborating organizations have to use in order to exchange messages.

How algorithms run in vantage6

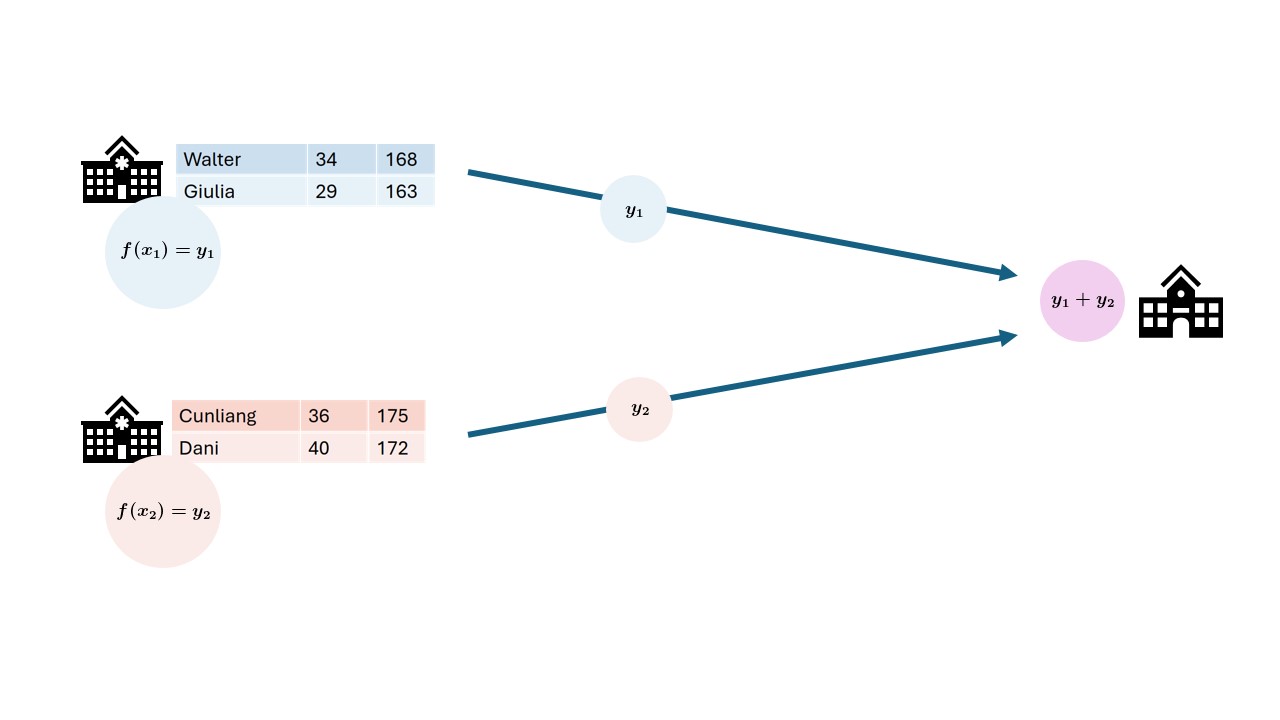

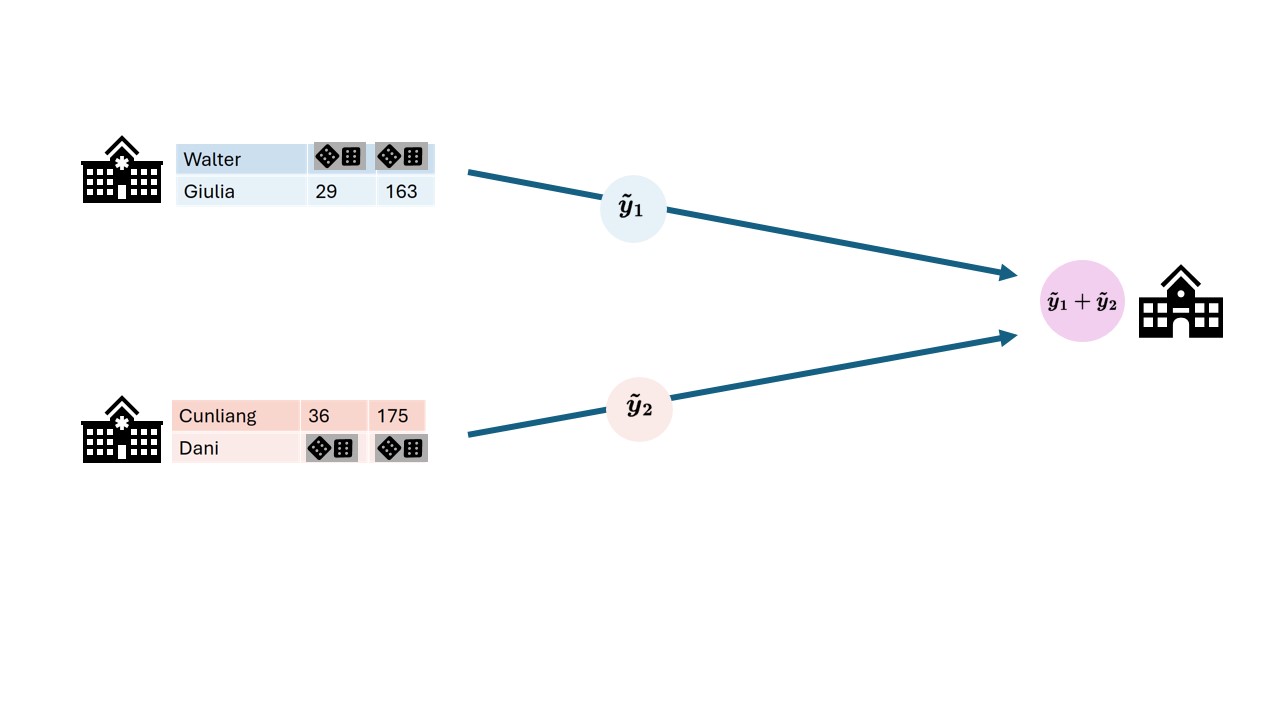

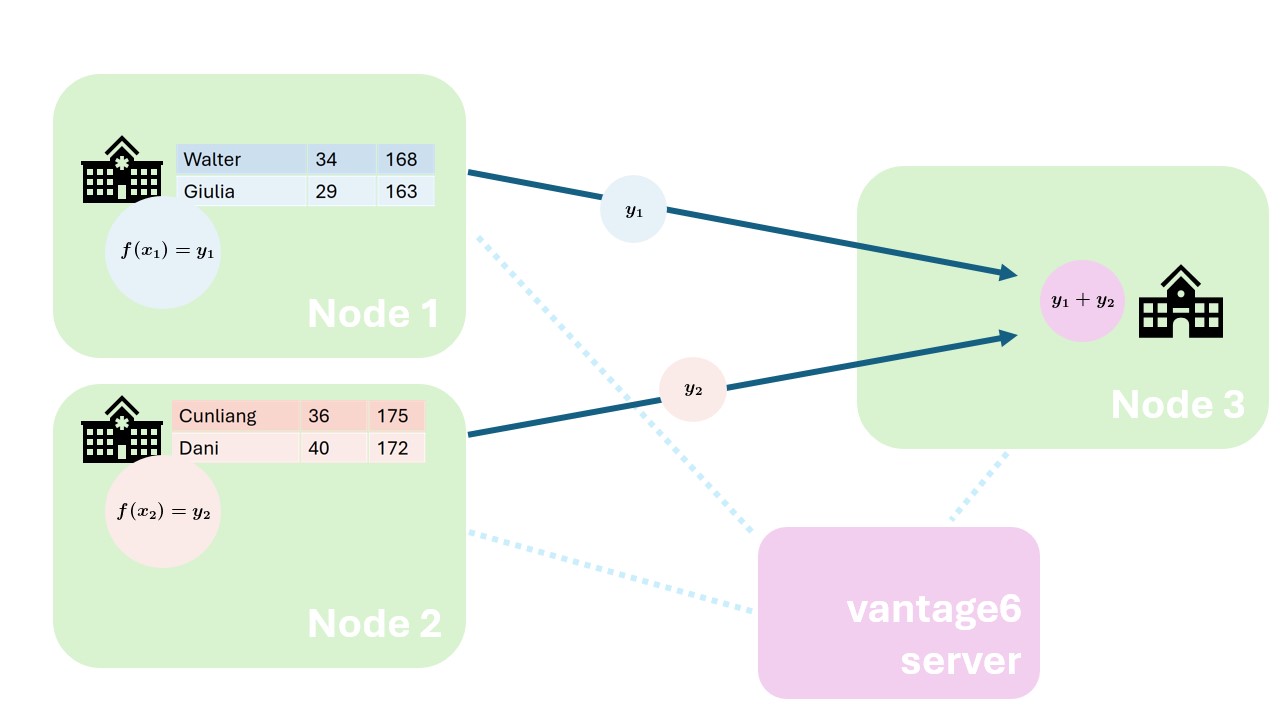

So how does vantage6 relate to the privacy enhancing techniques that we discussed in chapter 1? Let us consider the federated sum from chapter 1 again

As you can see, both the datasources and the aggregation entity are mapped to nodes. The vantage6 server is on the side, coordinating the analysis.

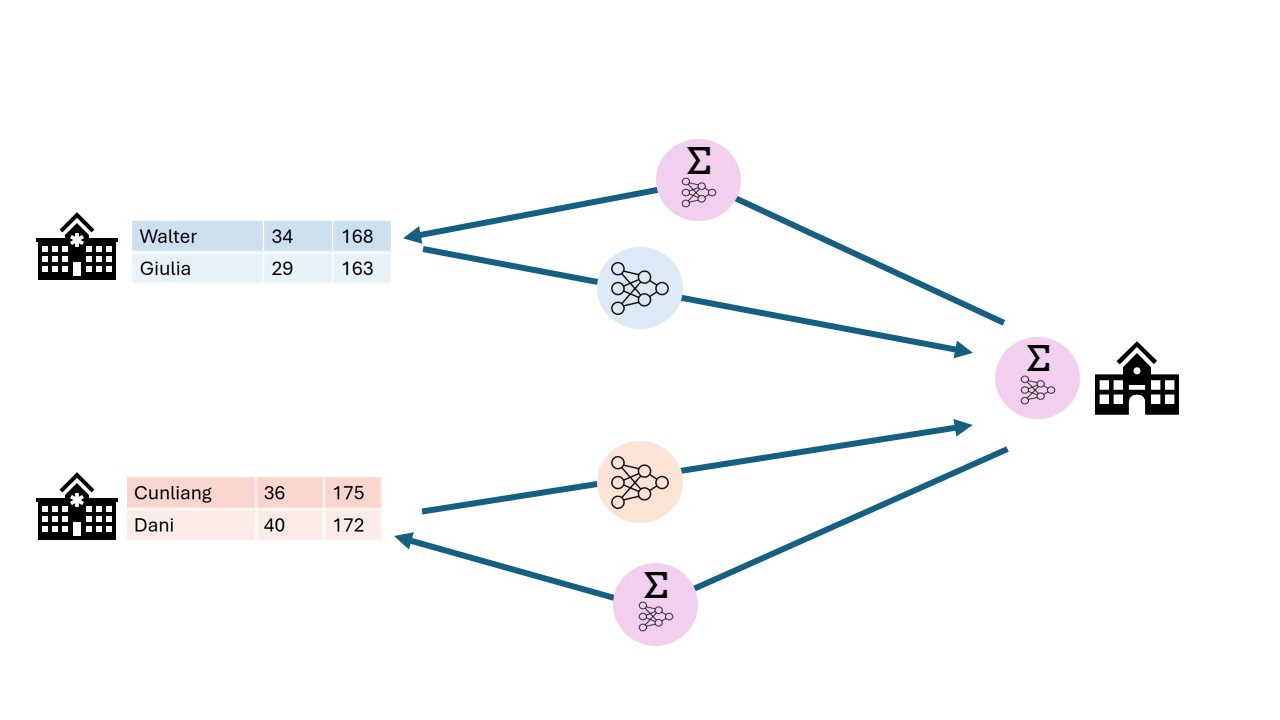

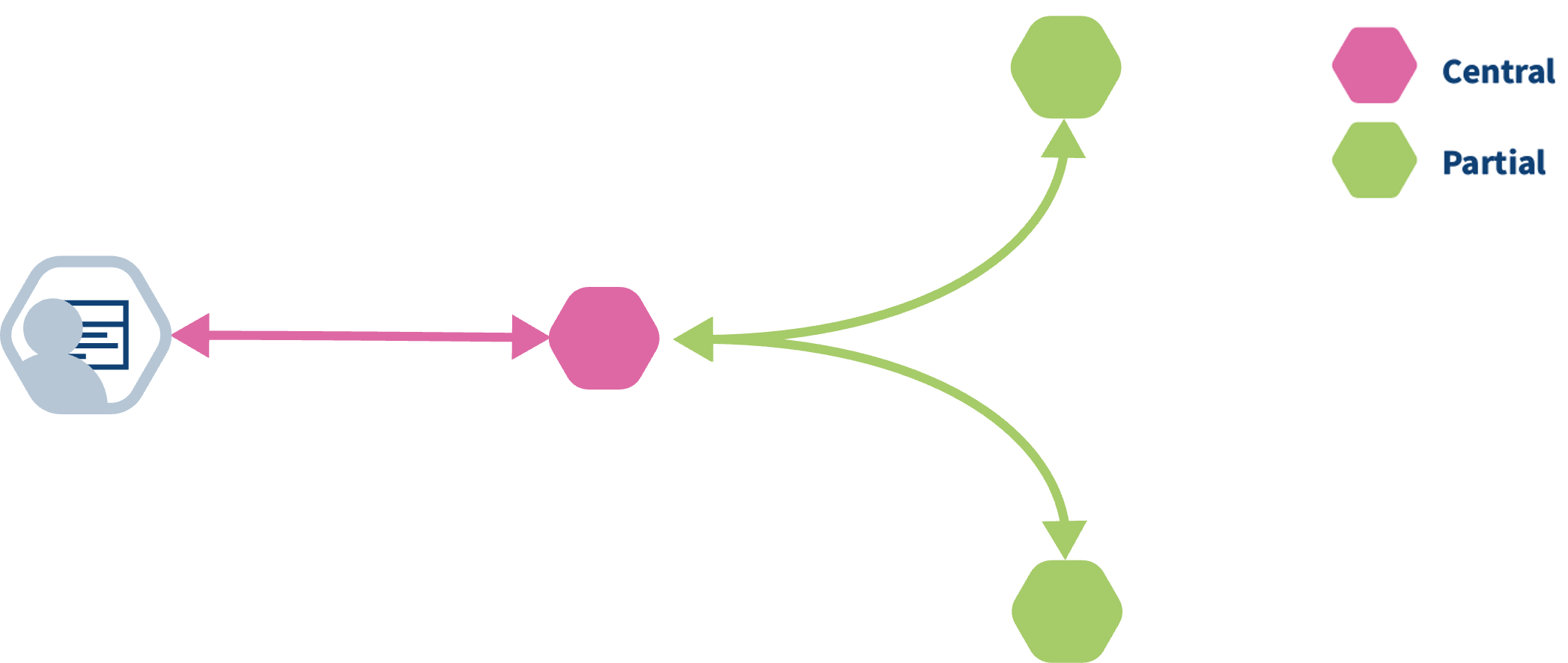

Federated algorithms can be split in a federated and a central part:

Central: The central part of the algorithm is responsible for orchestration and aggregation of the partial results. In our example this would be the aggregation.

Federated: The partial tasks are executing computations on the local privacy sensitive data. These would be the nodes on the left.

Now, let’s see what typically happens if a task is created in vantage6:

- The user creates a task for the central part of the algorithm. This is registered at the server.

- The task registration leads to the creation of a central algorithm container on one of the nodes.

- The central algorithm creates subtasks for the federated parts of the algorithm, which again are registered at the server.

- All nodes for which the subtask is intended start their work by executing the federated part of the algorithm.

- When finished, the nodes send the results back to the server

- Each node’s results are picked up by the central algorithm. The central algorithm then computes the final result and sends it to the server

- The user retrieves the result from the server.

vantage6-server vs central part of an algorithm

It is easy to confuse the vantage6 server with the central part of the algorithm: the server is the central part of the infrastructure but not the place where the central part of the algorithm is executed. The central part is actually executed at one of the nodes, because it gives more flexibility: for instance, an algorithm may need heavy compute resources to do the aggregation, and it is better to do this at a node that has these resources rather than having to upgrade the server whenever a new algorithm needs more resources.

Challenge 2: Who computes what?

Two centers \(A\) and \(B\) have the following data regarding the age of a set of patients:

\(a = [34, 42, 28, 49]\)

\(b = [51, 23, 44]\)

Each center has a data station and we want to compute the overall average age of the patients.

Given that the central average can be computed by summing up all the values and dividing the sum by the number of values, using the following equation:

\(\overline{x} =\dfrac{1}n \sum_{i=1}^{n} x_i = \dfrac{34+42+28+49+51+23+44}7\)

To make it ready for a federate computation, the equation can be rewritten as the sum of the elements of A plus the sum of the elements of B, divided by the number of elements of A plus the number of elements of B:

\(\overline{x} =\dfrac{1}{n_a+n_b} (\sum_{i=1}^{n_a} a_i+\sum_{i=1}^{n_b} b_i) = \dfrac{1}{4+3}[(34+42+28+49)+(51+23+44)]\)

Can you determine which part of the infrastructure will execute each part of the computation, and which is the result returned by the different parts?

The Server starts the central task on one of the two nodes (e.g. Data station A).

The node A starts two subtasks, one per node. Node A will run the following computation:

\(S_a =\sum_{i=1}^{n_a} a_i = (34+42+28+49)\)

and it will return the following results to the central task:

\(S_a=153\)

\(n_a=4\)

Node B will run the following computation:

\(S_b =\sum_{i=1}^{n_b} a_i = (51+23+44)\)

and it will return the following results to the central task:

\(S_b=118\)

\(n_b=3\)

The central task receives \(S_a\) and \(n_a\) from node A and \(S_b\) and \(n_b\) from node B, and will run the following computation:

\(\overline{x} =\dfrac{S_a+S_b}{n_a+n_b}=\dfrac{153+118}{4+3}=38.71\)

Future developments of vantage6

Back in 2018 when the development of vantage6 started, the focus was on federated learning. Since then, vantage6 has been extended to support different types of data sources, different types of algorithms and improved its user experience. Privacy Enhancing Technologies (PET) are a rapidly evolving field. To keep up with the latest developments, the vantage6 platform is designed to be flexible and to adapt to new developments in the field.

From the development team we are working towards making vantage6 the PETOps platform for all your (distributed) analysis needs.

We identified a number of areas where we want to improve and extend vantage6 in order to achieve this goal:

Policies

Currently, vantage6 lets you set several policies, such as the organizations that are allowed to participate in a collaboration, the algorithms that are allowed to run on the nodes, and the data that is allowed to be used in a collaboration. We want to extend this to a more generic policy framework in which any aspect of the vantage6 platform can be controlled by policies. This will maximize the flexibility of the platform and make it easier to adapt to new use cases.

For example, it would be possible:

- Define the version of vantage6 that is allowed to be used in a collaboration

- Which users is allowed to run a certain algorithm

- Which algorithms are allowed in a collaboration/study

- Define privacy guards at algorithm level

Model Repository

Currently, vantage6 is focused on privacy enhancing techniques. Some of these techniques result in a model that can be used to make predictions. We want to extend vantage6 with a model repository in which these models can be stored, shared and used. This will make it easier to reuse models and to compare the performance of different models.

- vantage6 is an open source platform to execute PET analysis.

- A client is used to interact with the system.

- A vantage6 server orchestrates the execution of algorithms.

- The nodes contain the data and execute the computation.

- The algorithms in vantage6 have a federated part, running on local data, and a central part, aggregating the results.

Content from Running a PET analysis without programming on vantage6

Last updated on 2024-10-15 | Edit this page

Overview

Questions

- How can I perform basic administrative activities on vantage6 using the web-based UI?

- How do I check the status of a specific collaboration or study in the vantage6 UI?

- How do I request a task through the vantage6 UI?

Objectives

- Explore specific data analysis scenarios that further illustrate the concepts introduced in episode 2.

- Understand the UI-based workflow for performing a data analysis on the given scenarios.

Prerequisite

Make sure you completed the Episode 2 where the concepts the UI is based on are introduced.

From theory to practice: a hypothetical case study using vantage6 collaborations

In vantage6 a collaboration refers to an agreement between two or more parties to participate in a study or to answer a research question together. This concept is central to the Privacy Enhancing Technologies (PETs) that vantage6 supports. Each party involved in a collaboration remains autonomous, meaning they retain control over their data and can decide how much of their data to contribute to the collaboration’s global model and which algorithms are allowed for execution.

To illustrate this in practice, you will work on a simulated collaboration scenario: an international consortium project of multiple health research institutes, working together on two studies:

Age-Related Variations in Overweight Prevalence: A Comparative Study Across Gender and Age Groups (AGOT2024) .

The Effect of Gender on Height Development Across Various Age Groups (GGA2024).

The first study, AGOT2024, involves the analysis of age and weight-related data available on a subset of the institutions participating in the collaboration. Likewise, GGA2024 involves the analysis of age and height-related data from a different (and potentially overlapping) subset of the collaboration’s participants. In this Episode you will play the role of a researcher of one of the institutions that conform the consortium. As seen on the previous Episode, this means that you will be able to perform data analysis on the whole collaboration, or on any of the two studies defined for it. The following illustrates the kind of collaboration you will be part of (yours may have a different number of nodes, with different names and study configurations).

The consortium members already took care to ensure that their corresponding datasets follow the same structure (variable names and data types). This is key for making the federated analysis possible. The following is an example of how the ‘default’ database on all the nodes within the collaboration look like.

| gender | age | height | weight | isOverweight | ageGroup |

|---|---|---|---|---|---|

| M | 39 | 152 | 108 | False | 30 - 40 |

| M | 8 | 118 | 106 | False | 0 - 10 |

| M | 16 | 161 | 110 | True | 10 - 20 |

| M | 94 | 110 | 115 | True | 90 - 100 |

| M | 47 | 117 | 152 | True | 40 - 50 |

| F | 29 | 127 | 110 | True | 20 - 30 |

| M | 5 | 95 | 65 | False | 0 - 10 |

| M | 39 | 142 | 196 | False | 30 - 40 |

| F | 20 | 189 | 112 | False | 20 - 30 |

| F | 84 | 145 | 116 | False | 80 - 90 |

Interacting with the v6 server

To perform a data analysis, or any other kind of management activity within the collaboration you are part of, you need to interact with the vantage6 server. As described in Episode 2, the vantage6 server is the central component responsible for managing the entire federated/multi-party computation infrastructure and facilitating communication between the various entities within the vantage6 platform. There are two ways of interacting with the server: either by using a web-based user interface, or by programmatically requesting actions to the server API -the same API that powers the web interface behind the scenes. In this Episode you will perform data analyses on the simulated collaboration scenario using the web-based interface, the most user-friendly one (in Episode 4 you will learn how to configure your own collaborations, and on Episode 5, you will explore how to interact with the server programmatically for more advanced use cases).

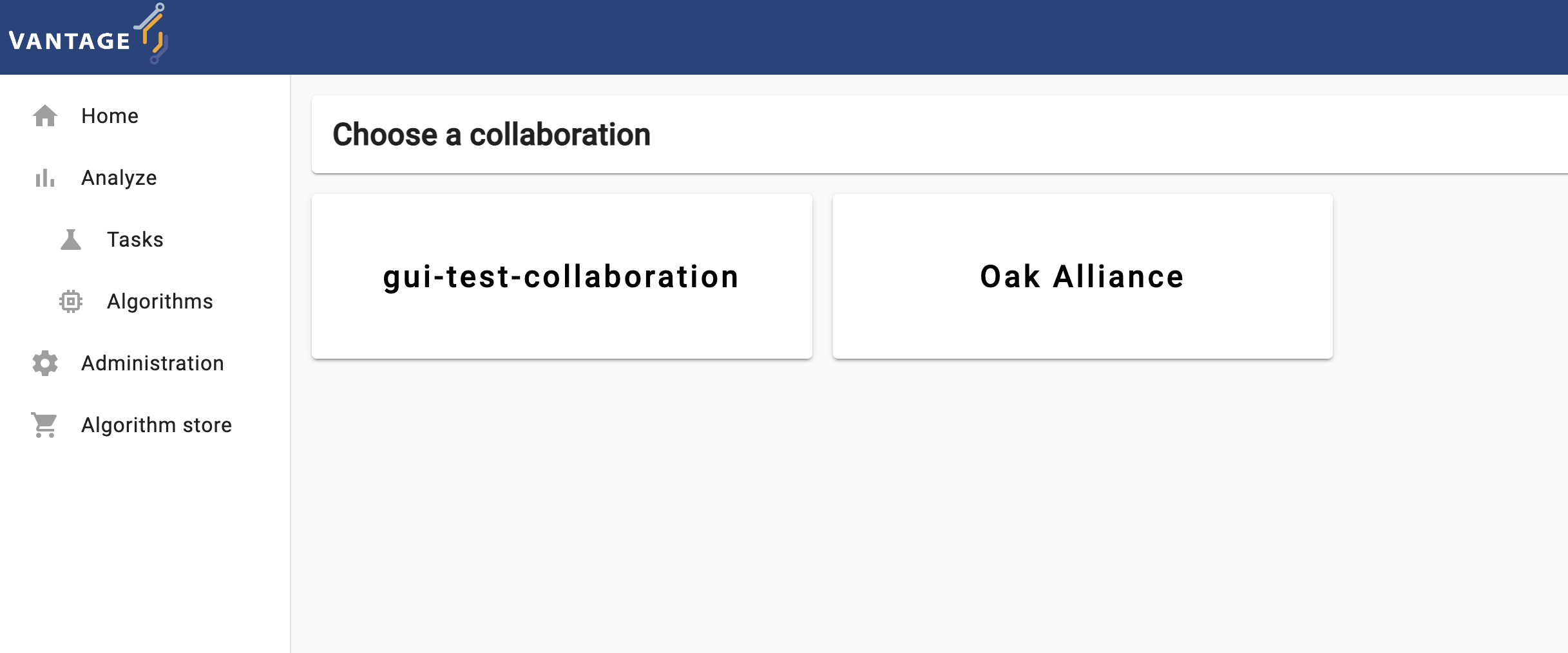

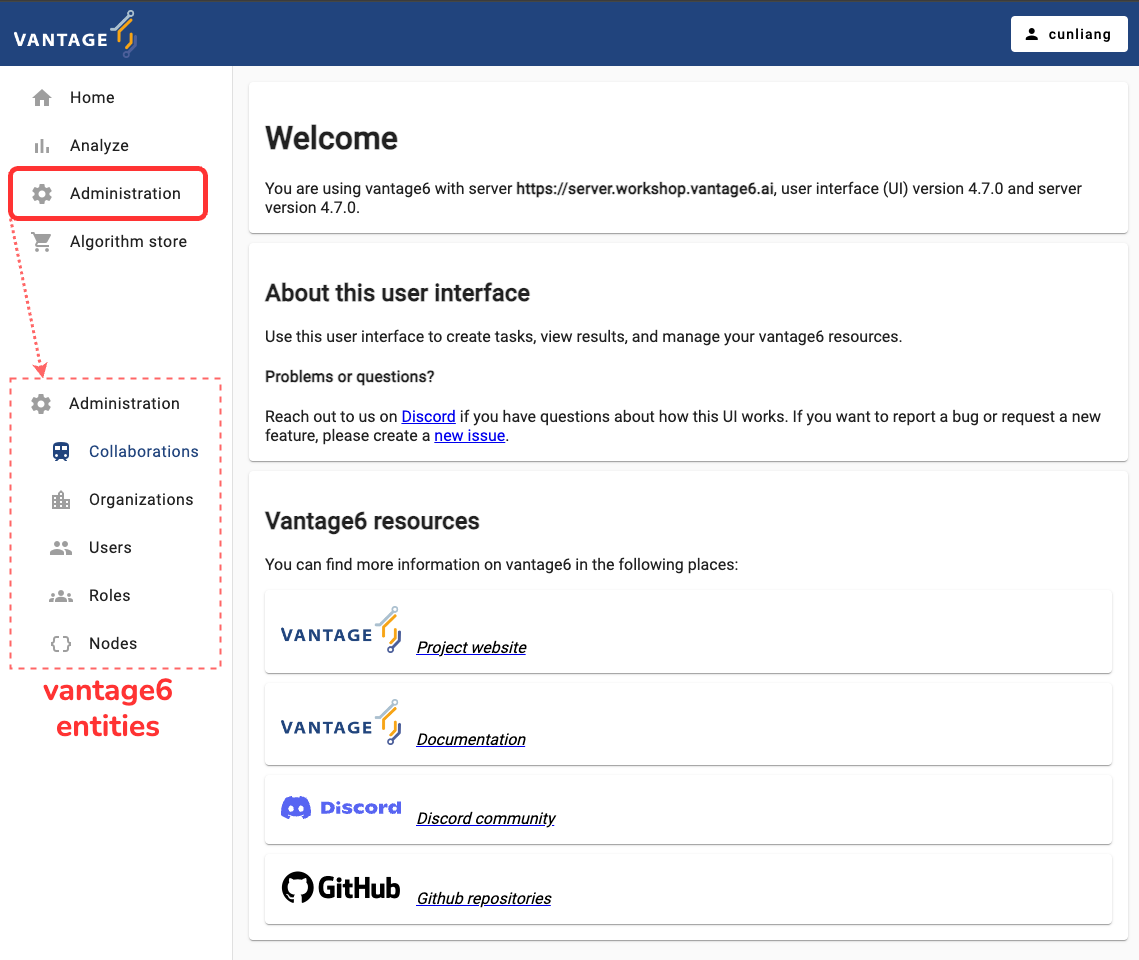

Navigating through vantage6’s UI

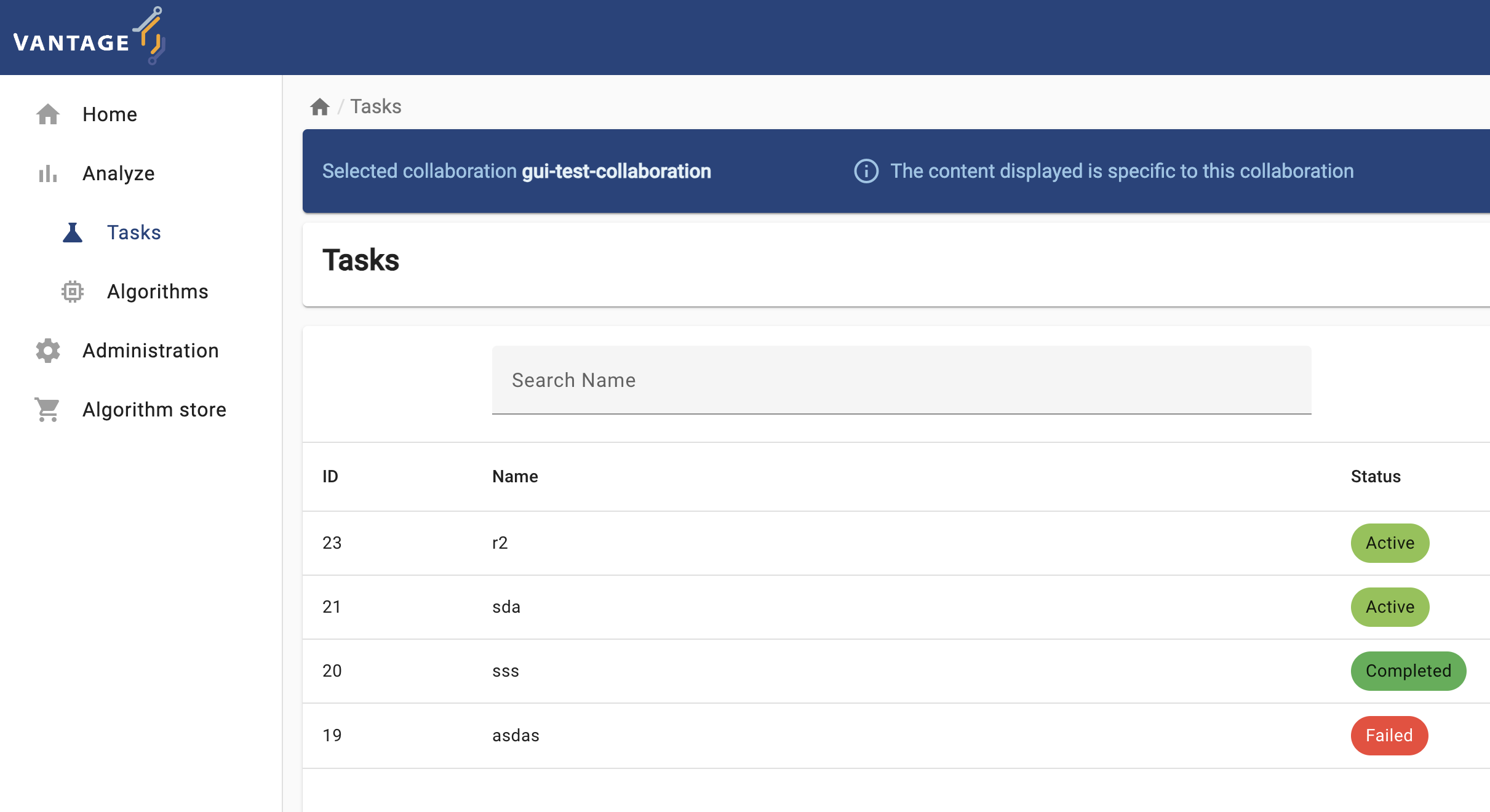

The elements and navigability of vantage6’s UI are based on the concepts introduced in Chapter 2. For instance, as seen on the screenshots below, upon selecting a collaboration on the start page, if you select ‘Tasks’ you will see the status of the tasks created for that particular collaboration.

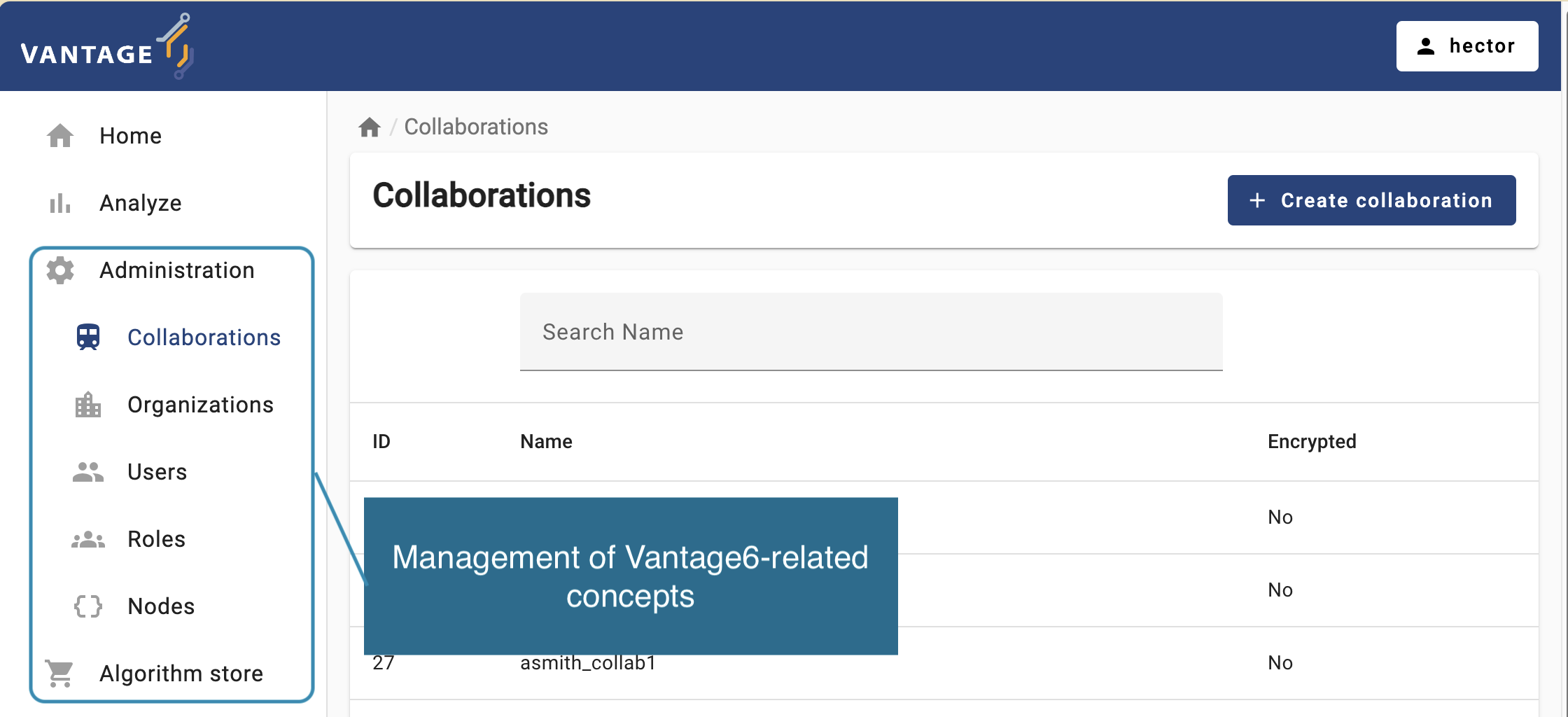

Likewise, expanding the Administration icon in the left

panel will let you choose vantage6 entities youn can manage:

Organizations, Collaborations,

Roles, Users, and Nodes. You can

click on an entity to see more details or to edit the entity.

Getting familiar with the vantage6 UI

To get familiar with vantage6’s UI, you will start with a simple task: edit the details of your own user (the connection details for this activity will be given by the instructors). Log into the UI using the information provided and navigate to the administration page and try to update your email, first name, and last name.

Running a PET (privacy-enhancing technology) through the user interface (no coding involved!)

Now that you are familiar with the UI basics, the next two details you need to figure out as a researcher, in order to perform you analysis are (1) which kind of analysis I need perform, and (2) on which data nodes it will be peformed? There is another important consideration, though: is my collaboration or my studies ready for my analysis? Keep in mind that each node within your collaboration is autonomously managed by the organization it was configured. This means that you although you can include them in your analysis, you can’t control them (they may just be offline for no reason).

Challenge 2: checking the status of the nodes through the UI

With your researcher credentials, explore the collaboration you have access to. Check which organizations are part of it and if they are online. Also check which organizations were assigned to each study (AGOT2024, GGA2924). Based on this:

- Which study is ready for executing an analysis?

- If you need to perform an analysis for the study that is not ready, which organization you would need to contact to fix this situation?

Running a federated algorithm

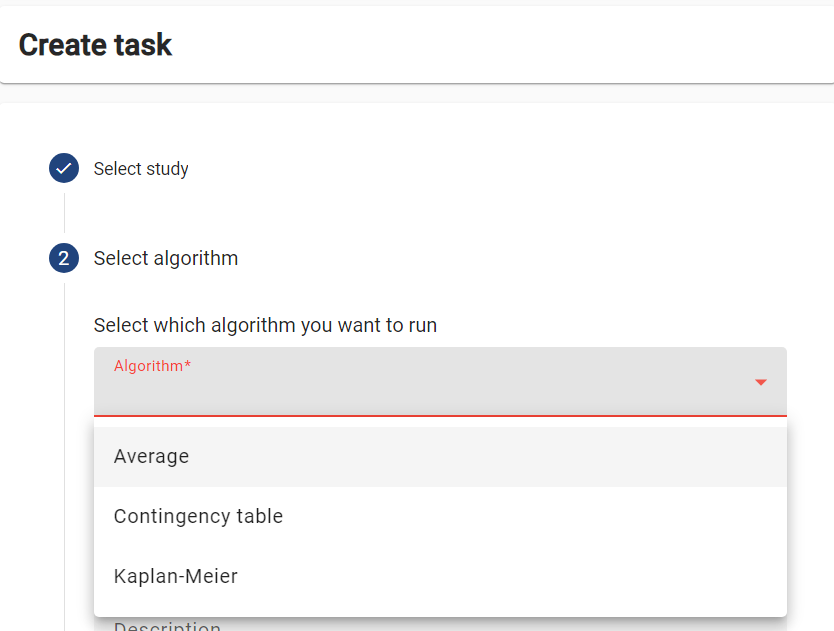

Now you will perform an analysis on the study that is ready for it (i.e., the study with no offline nodes). As this is an introductory exercise, you will first use the simple algorithm introduced in Chapter 2: the Federated Average.

Challenge 3: as a researcher, requesting an algorithm execution! (partial function)

Login with your researcher credentials.

Select

Analyzeon the Administration option from the panel on the left, and then select your collaboration.-

Select

+ Create taskto create a new task on your collaboration.

-

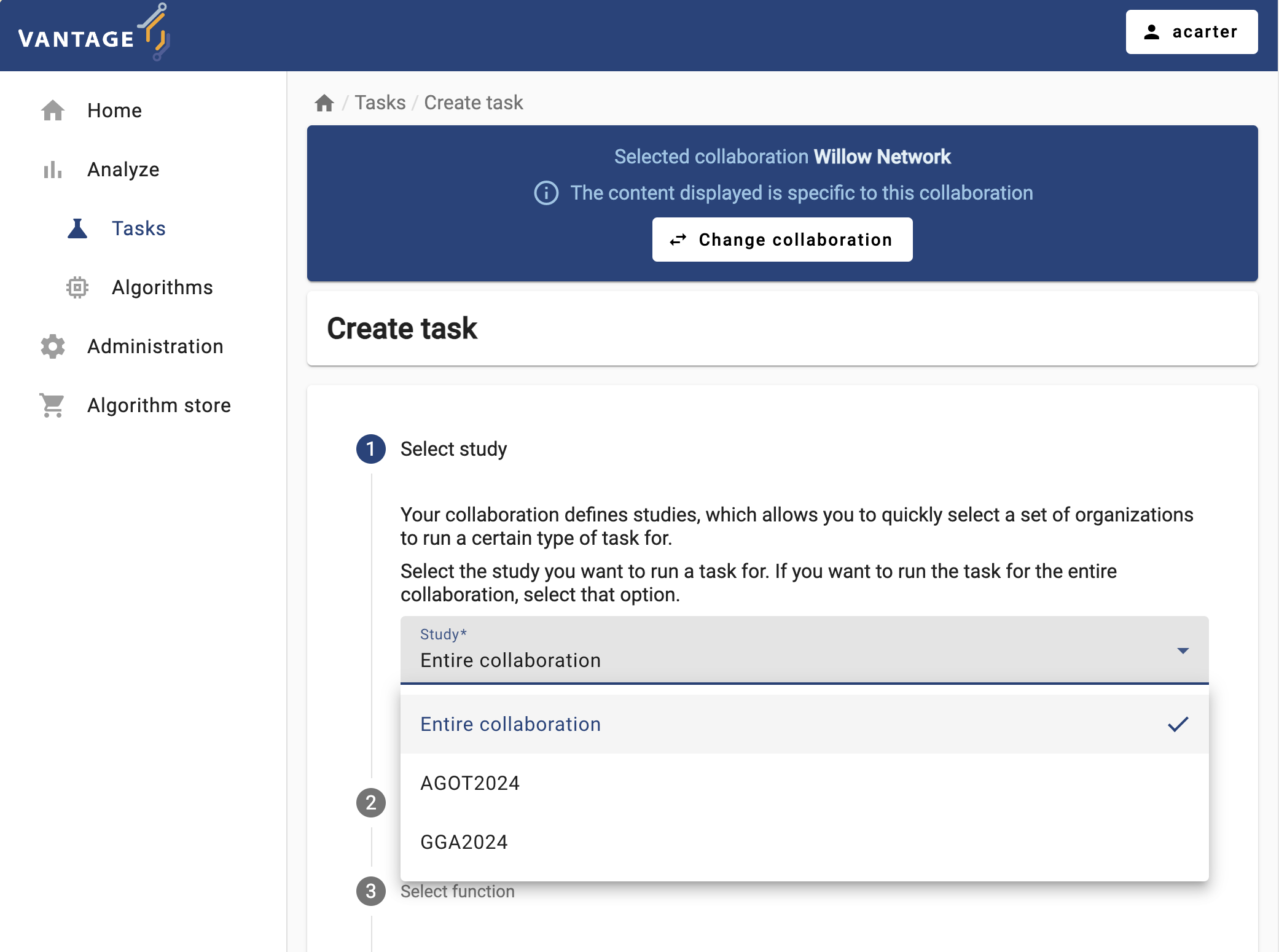

As the first step, you can choose between running the task on the entire collaboration, or on one particular study (i.e., on a subset of the collaboration’s institutions). Choose the study that is ready for an analysis.

-

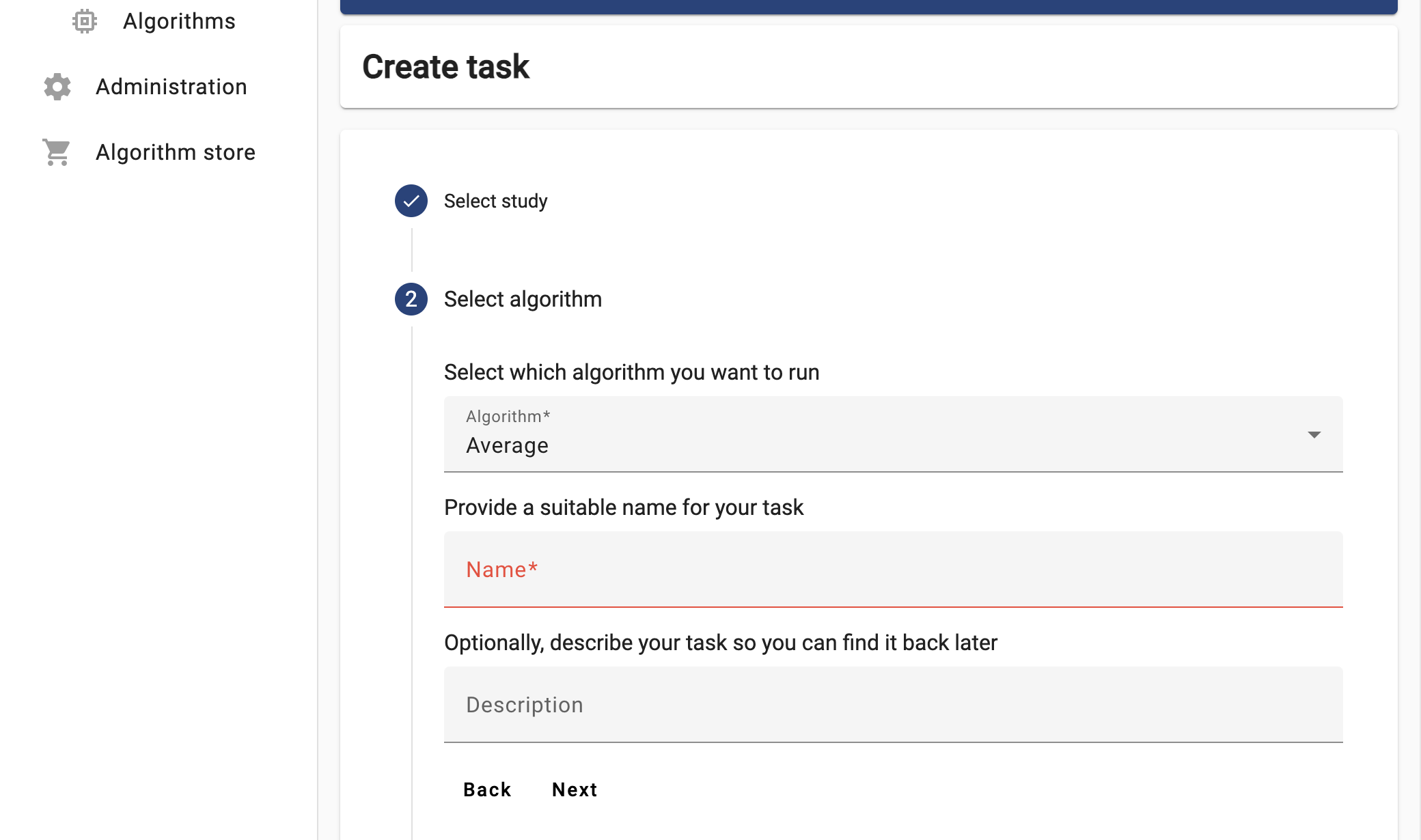

The ‘Average’ algorithm should be listed under the ‘Select which algorithm you want to run’ dropdown menu. Select it, and provide a name and a description.

-

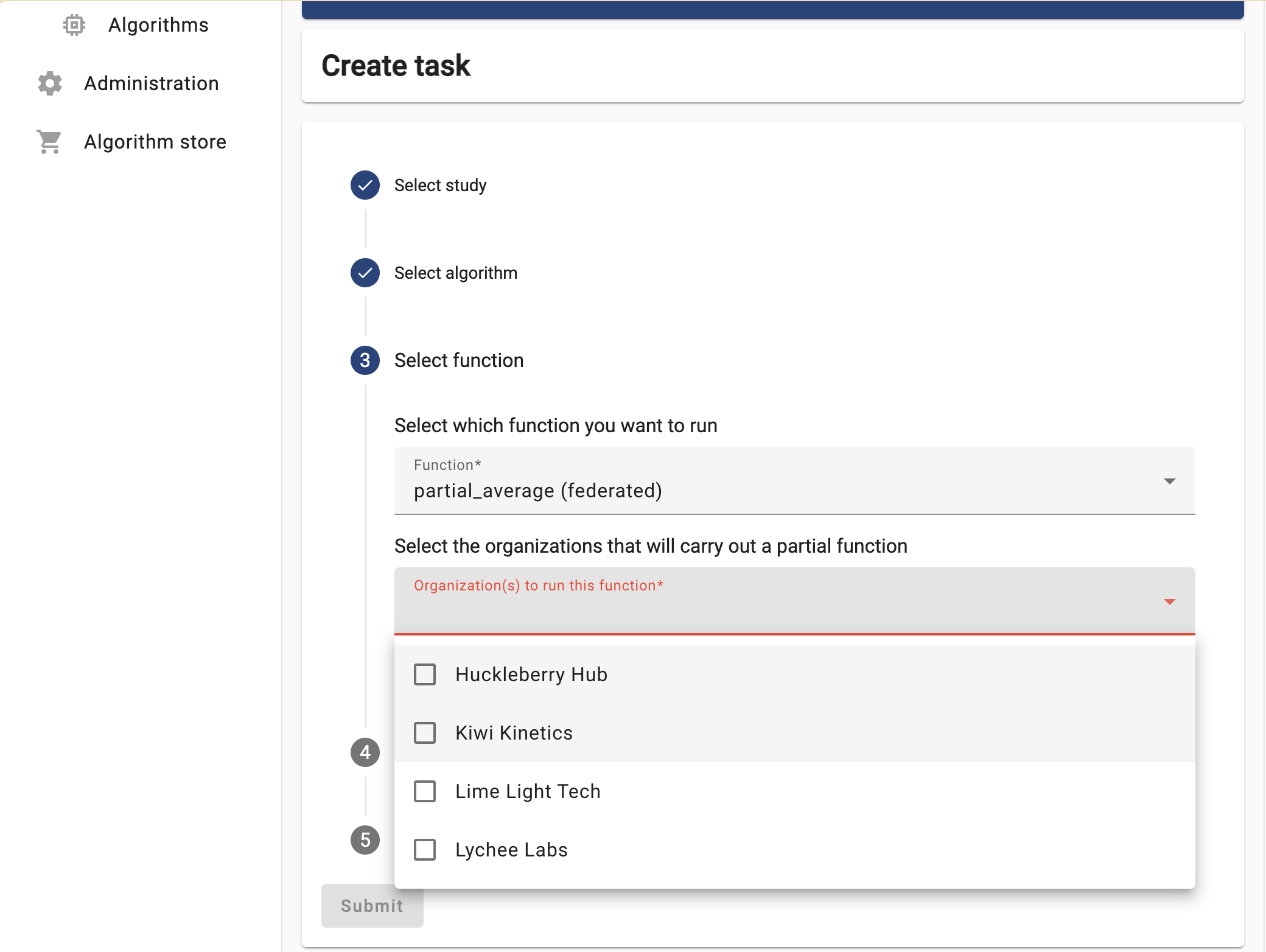

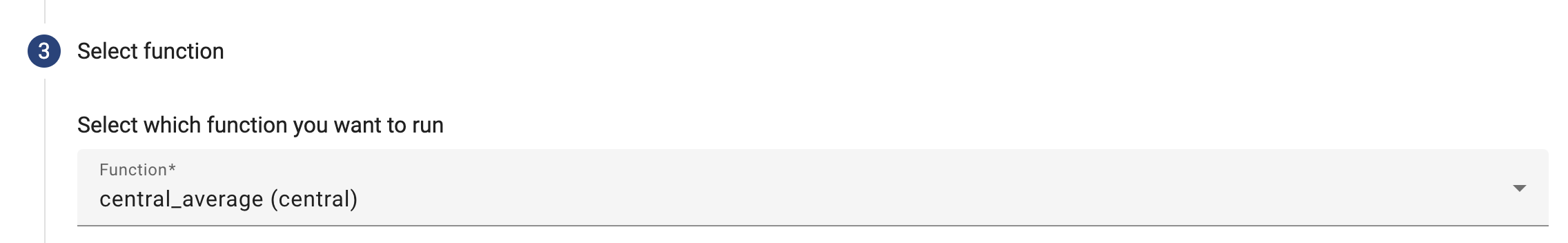

Now the UI will let you choose between the two functions you explored in Challenge #2. For now try to run the

partial_average, selecting ALL the organizations.

Select the ‘default’ database, choose any numerical column relevant for the study you selected, and then click on ‘Submit’.

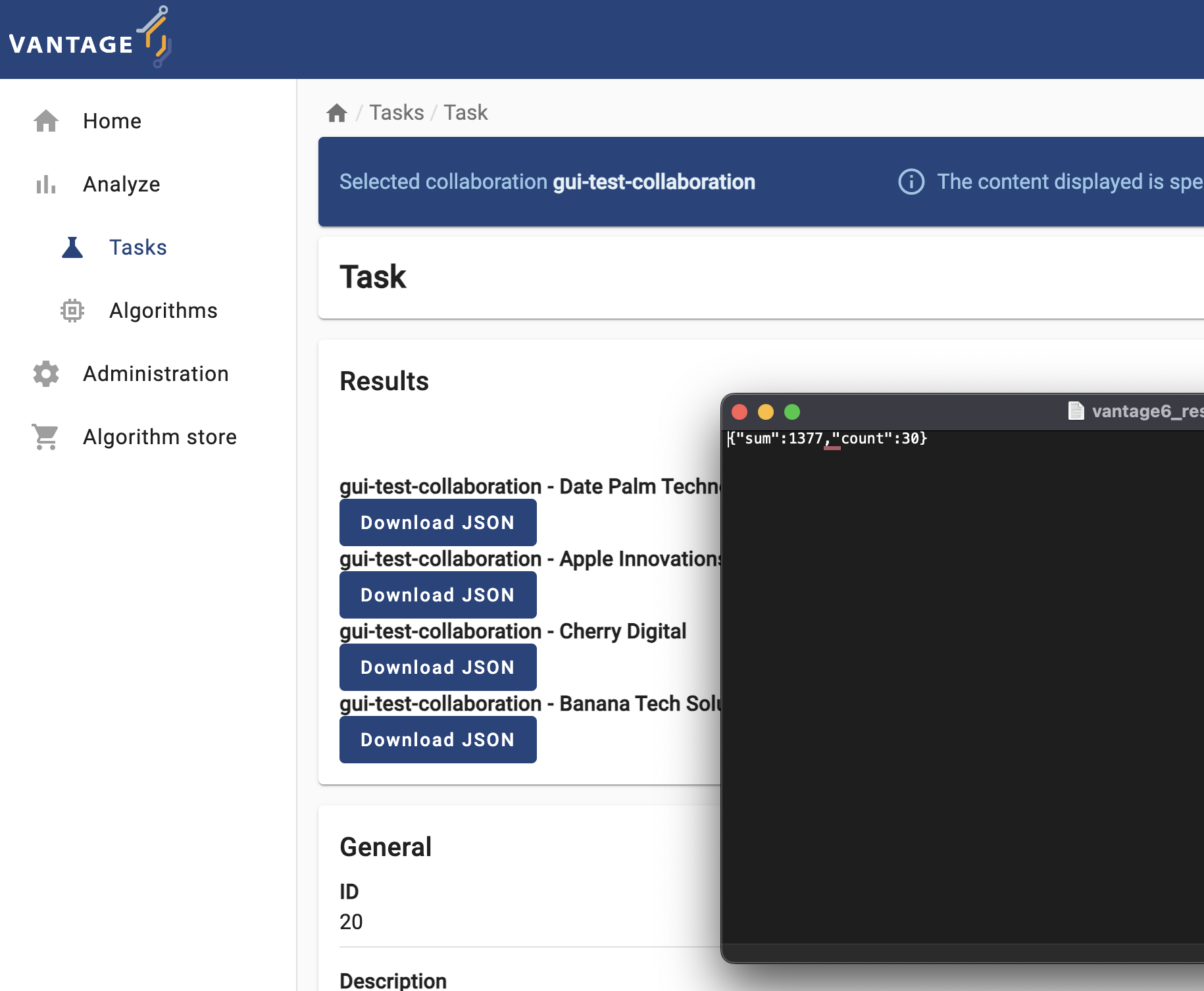

The task you just requested should be listed with a ‘pending’ status. Once finished, download the JSON results and open them on a text editor.

Based on these results, discuss the following:

- What does the content of these files mean? Why the

central_averagefunction is returning this?

Each node, after executing the partial_average function,

returns the two values needed by the central function of the ‘federated

average’ algorithm (as described in Chapter 2): the number of records

within the database, and their sum. These values are ‘encoded’ as a JSON

document, so they can be read, programatically, by the

central_average.

Challenge 4: let’s see what the central function does!

Repeat the same process from Challenge 3 (again, on the Study that is ready for it), but this time choose the ‘central’ function. As you see, when choosing this function only one organization can be selected.

Once again, wait for the process to finish and check the JSON results. Keep an eye on the Tasks section, and see how the processes are created.

Discuss the following:

There is a node that appeared twice in the processes list during the algorithm execution: in the Main process and on the Child processes list. Take a look at the source code of the algorithm you have just executed. Can you spot in the code why this happened?

Can you identify, within the same source code, where the data you saw on Challenge 3 was created?

Given the source code above, why does the

central_averagefunction, unlikepartial_average, not get any data as an input?

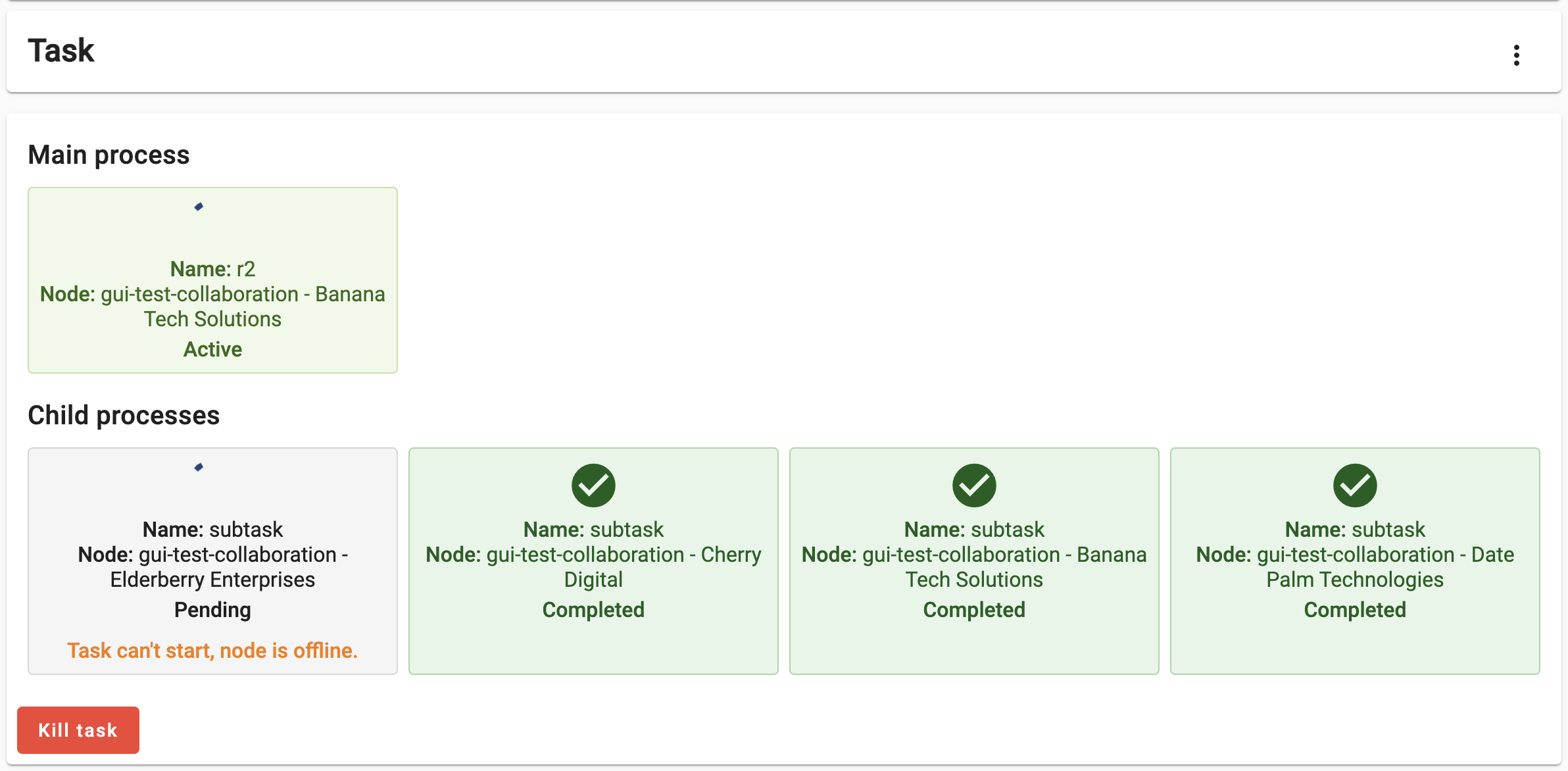

In this exercise you created a task for a ‘central’ function, which, when executed requests other nodes to run a ‘partial’ one, combining their results upon completion. The central function is designed in a way that it make the request to all the nodes within the collaboration/study. As the node that gets the request to execute the ‘central’ function, is also part of the collaboration, it ends executing two tasks: the central task, and the partial one.

Here, the ‘partial’ part of the algorithm encodes its result as the JSON document seen on the previous challenge.

The

central_averagefunction is designed just to aggregate the results of the partial averages sent by the other nodes. Hence, it doesn’t need direct access any dataset.

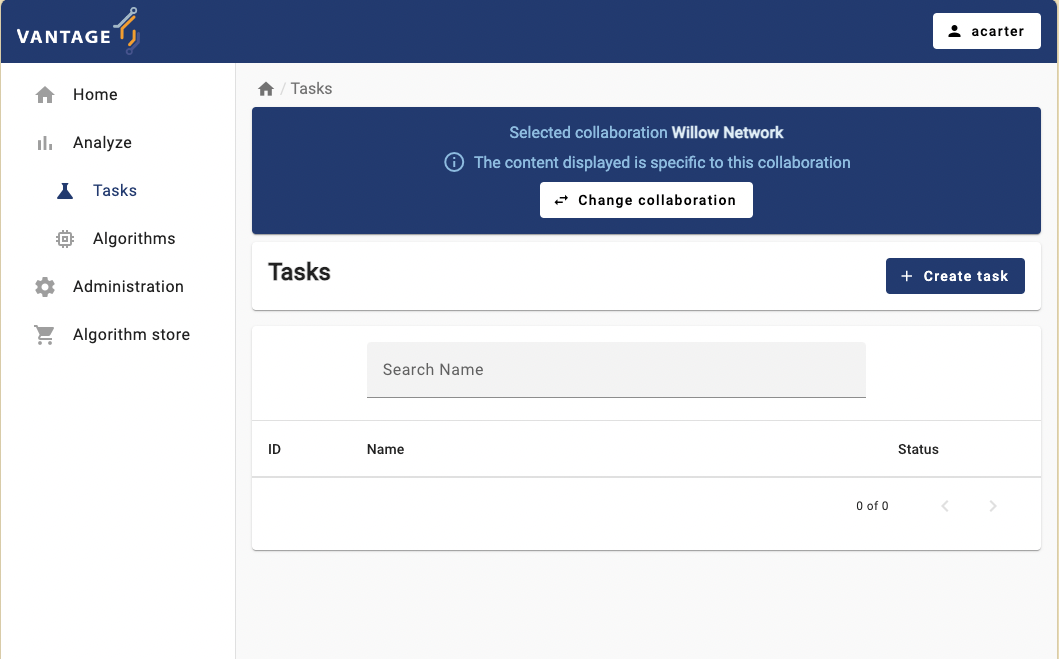

Challenge 5: handling problems through the UI!

Based on your current understanding of the federated average algorithm, speculate on what would happen if you run the ‘central’ function of this algorithm on a study that includes ‘offline’ nodes. Once you have made your prediction, validate it by repeating the process from the previous challenge, this time using the study with the ‘offline’ node.

Discuss the following:

- What happened with the Task? What can you do about it with the UI?

The algorithm didn’t crash, but is kept on hold (with the Active status) indefinitely. The Central task requests all the nodes in the study to run the ‘partial’ function. As the server is unable to transfer this request to the offline node, this child process is kept on hold, until the node is back online.

Consequently, the Main process is also kept on hold, and the process stays with an ‘Active’ status indefinitely (or until the node is back online).

Challenge 6: handling problems through the UI, again.

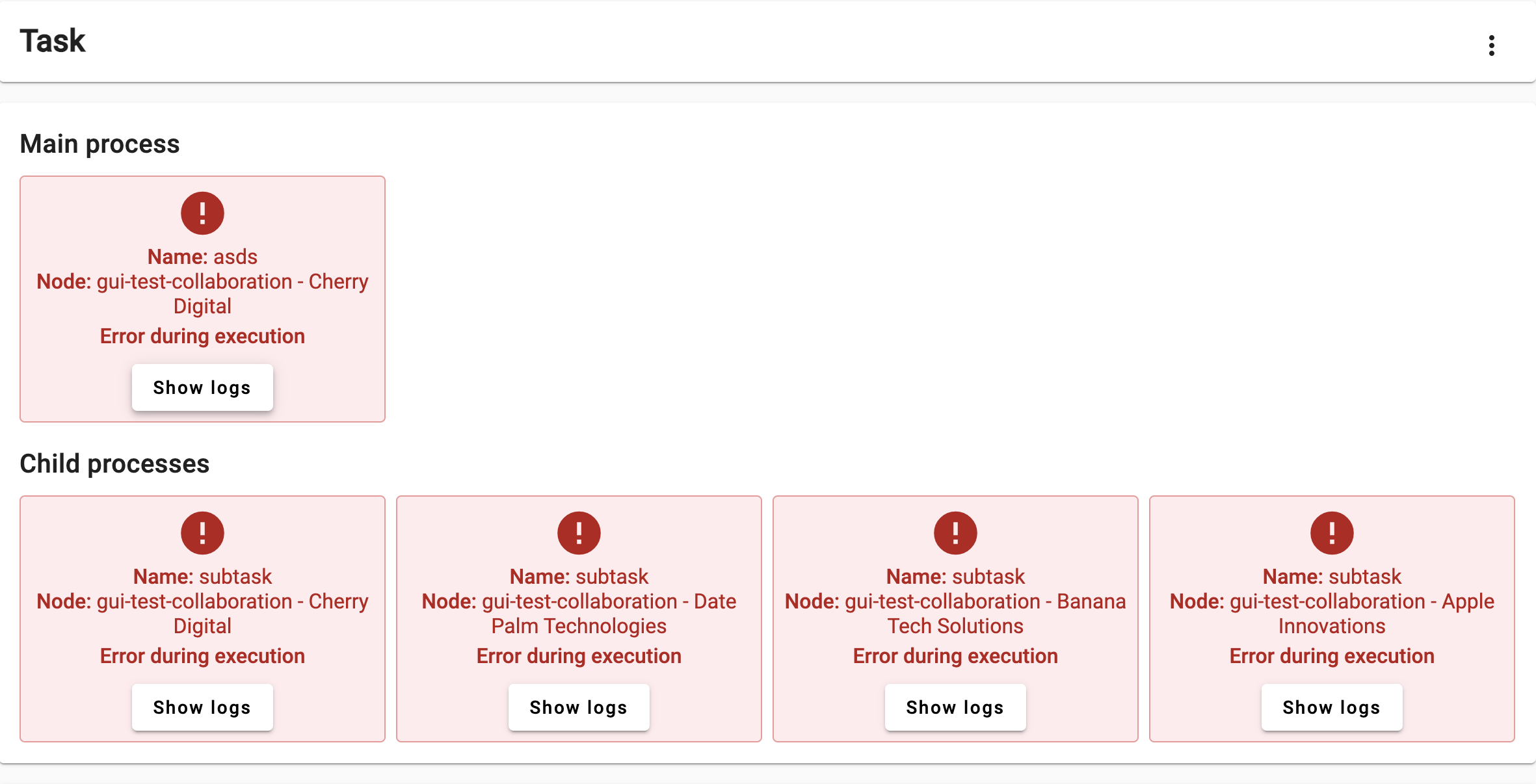

This time, let’s try to do something that may make the federated average algorithm not work as expected. Create a task, this time selecting the ‘operational’ study (the one with all of its nodes online), and use the central function in it. This time, choose a non-numerical variable (see the table sample).

Look at the logs and discuss the following:

- Why did both

partial_functionandcentral_functioncrash? (See source code)

- The

partial_functioncrashed while trying to parse the input as a number. Thecentral_functioncrashed due to a division-by-zero.

Challenge 7: Play around with other algorithms!

See if you can run a Kaplan-Meier analysis or Crosstab analysis on the same study.

- Go to analyze, and create a new task as before

- Select a different algorithm from the dropdown menu

- Follow the same steps as before to create a task

You will encouter different input parameters and output for each algorithm.

Content from Managing vantage6 server via the user interface

Last updated on 2024-09-24 | Edit this page

Overview

Questions

- What is the permission system in vantage6?

- What are the default roles in vantage6?

- How to create a new organization using vantage6 user interface (UI)?

- How to create a new user using vantage6 UI?

- How to create a new collaboration using vantage6 UI?

Objectives

- Understand the permission system of vantage6

- Understand the default roles in vantage6

- Be able to create a new organization using the vantage6 UI

- Be able to create a new user using the vantage6 UI

- Be able to create a new collaboration using the vantage6 UI

Vantage6 permission system

Vantage6 uses a permission system to control who can do what in the system. The permission system is based on roles, which are collections of rules that define the permissions of a user. A user can have multiple roles, and the permissions of the user are defined by the assigned rules.

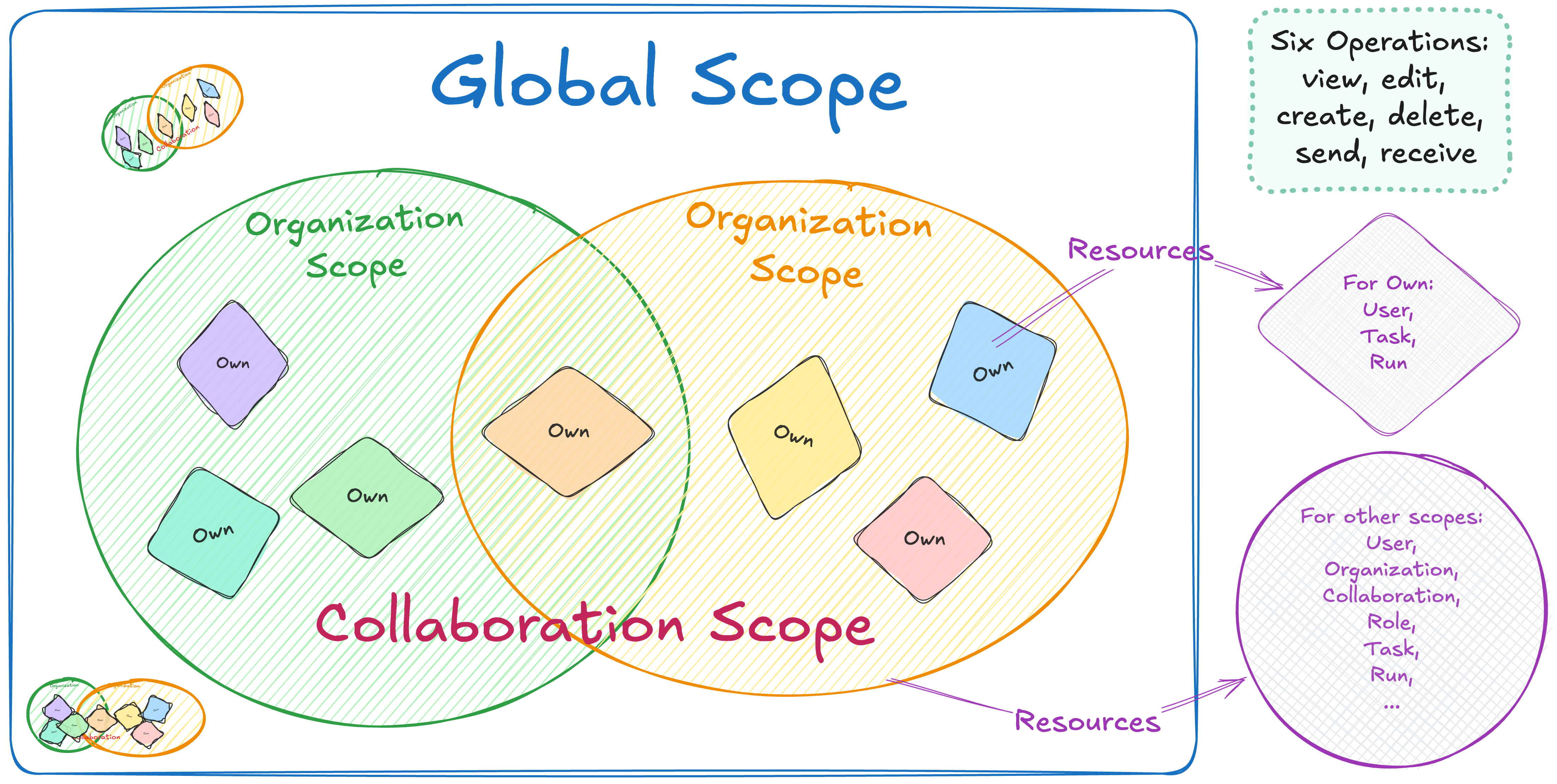

The permission rules define what each entity is allowed to do, based

on the operation (view, create, edit, delete, send, receive), the scope

(own, organization, collaboration, global), and the resource

(e.g. users, organizations). Users can be assigned anywhere between zero

and all of these permission rules. For example, having the rules with

create in the scope organization for the

resource user means that the user can create users for the

organization they belong to.

There are six operations: view, edit, create, delete, send and receive. The first four correspond to GET, PATCH, CREATE and DELETE requests, respectively. The last two allow users to send and receive data via socket events. For example, sending events would allow them to kill tasks that are running on a node. For more details about Send and Receive operations, see the vantage6 documentation.

The scopes are:

- Global: all resources of all organizations

- Organization: resources of the user’s own organization

- Collaboration: resources of all organizations that the user’s organization is in a collaboration with

- Own: these are specific to the user endpoint. Permits a user to see/edit their own user, but not others within the organization.

Note that not every resource has all scopes and/or operations. For

example, the collaboration resource does not have

create operation for the organization scope,

as it does not make sense to create a collaboration that only your own

organization can participate in.

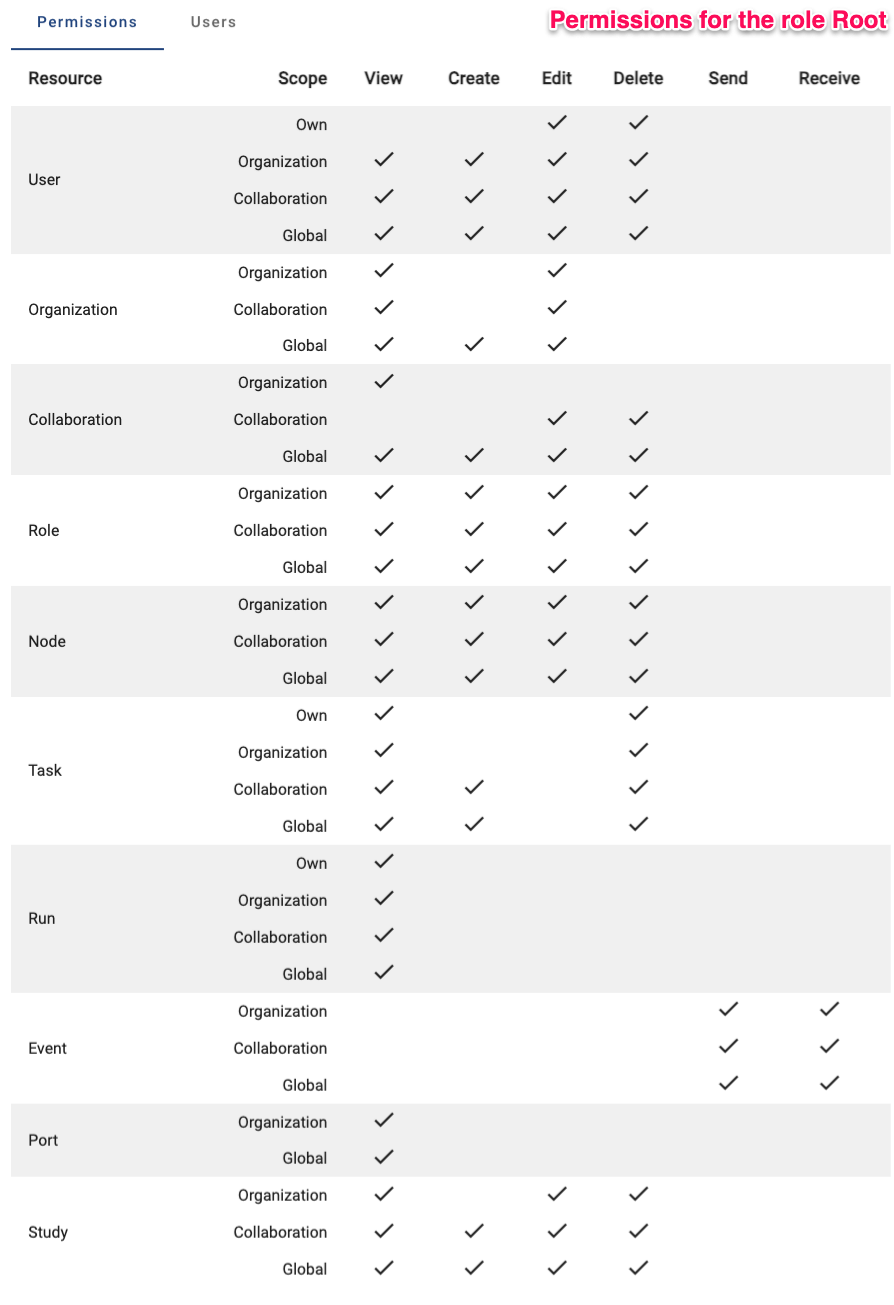

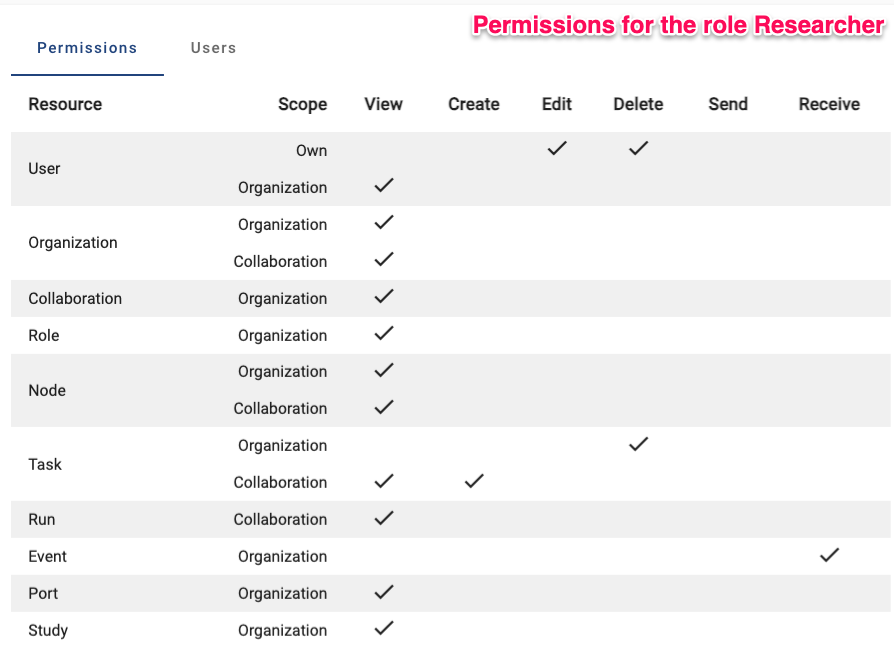

To make it easier to assign permissions, there are also predefined roles:

- Root: has all permissions (👉 see image below)

- Collaboration Admin: can do almost everything for all organizations in collaborations they are a member of

- Organization Admin: can do everything for their own organization

- Researcher: can view the organization’s resources and create tasks (👉 see image below)

- Viewer: can only view the organization’s resources

The permissions are set up in the Roles tab in the

administration page. You can click on a role to see the permissions of

that role. You can also create a new role by clicking the

Create role button.

The permission structure allows for a lot of flexibility, but it can be complex for beginners to set up. The default roles provide a quick way to set up permissions, but it is recommended to review them before using them in a project.

Challenge 1: What can you do in vantage6?

Go the administration page in the vantage6 UI, check the permissions that you have, and answer the following questions:

- What is your role in vantage6?

- Do you have the permissions to create a new organization, a new user or a new collaboration?

- Do you have the permission to remove an existing organization, a user, or a collaboration?

- Check it with your instructor.

- Yes, you should be able to create a new organization, a new user, and a new collaboration. If not, ask your instructor to give you the necessary permissions.

- Check it with your instructor.

Manage vantage6 project using the UI

In this section, we will go through the steps to create a new organization, a new user and a new collaboration using the vantage6 UI.

First you need to log in to the vantage6 UI, and then go to the

Administration page. You can find the

Administration tab in the left side of the start page.

Clicking on the Administration tab will show you all tabs

for vantage6 entities.

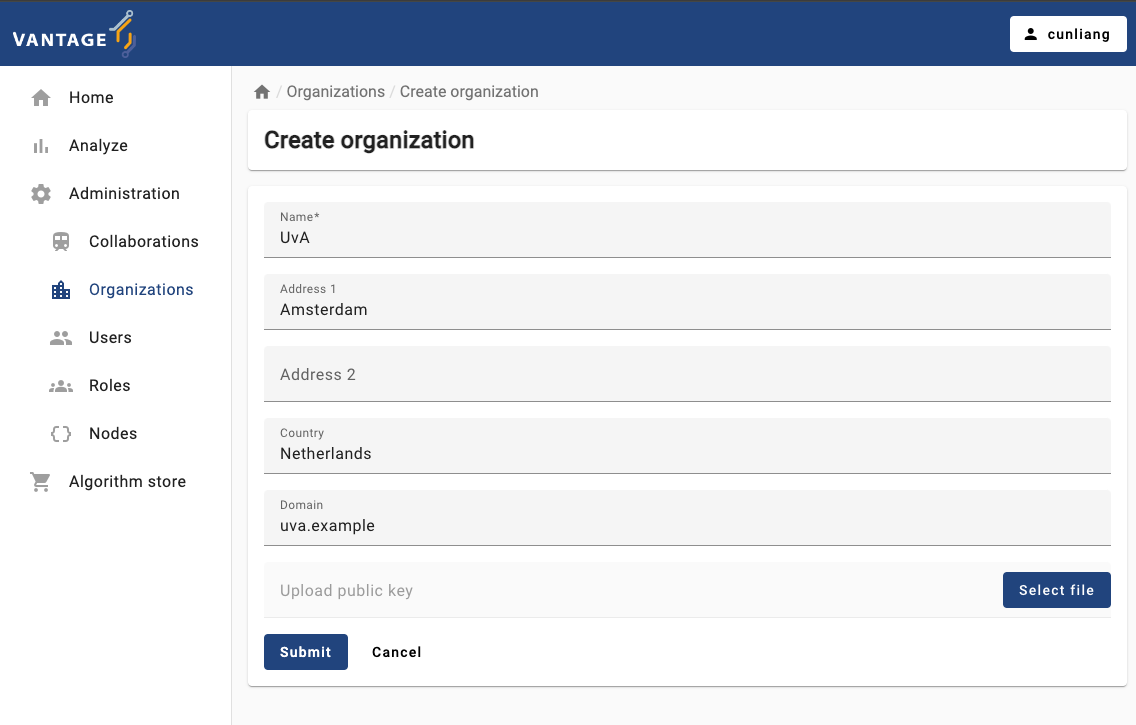

Create a new organization

It’s quite straightforward to create a new organization in vantage6. Here are the steps:

- Click on the

Organizationstab in the administration page. - Click on the

Create organizationbutton. - Fill in the details of the new organization.

- The

Upload public keyfield is optional. You can upload a public key for the organization if you want to use encryption in the collaboration. But note that we DO NOT use encryption in this course. For more details about encryption, see the vantage6 documentation.

- The

- Click on the

Submitbutton to create the new organization.

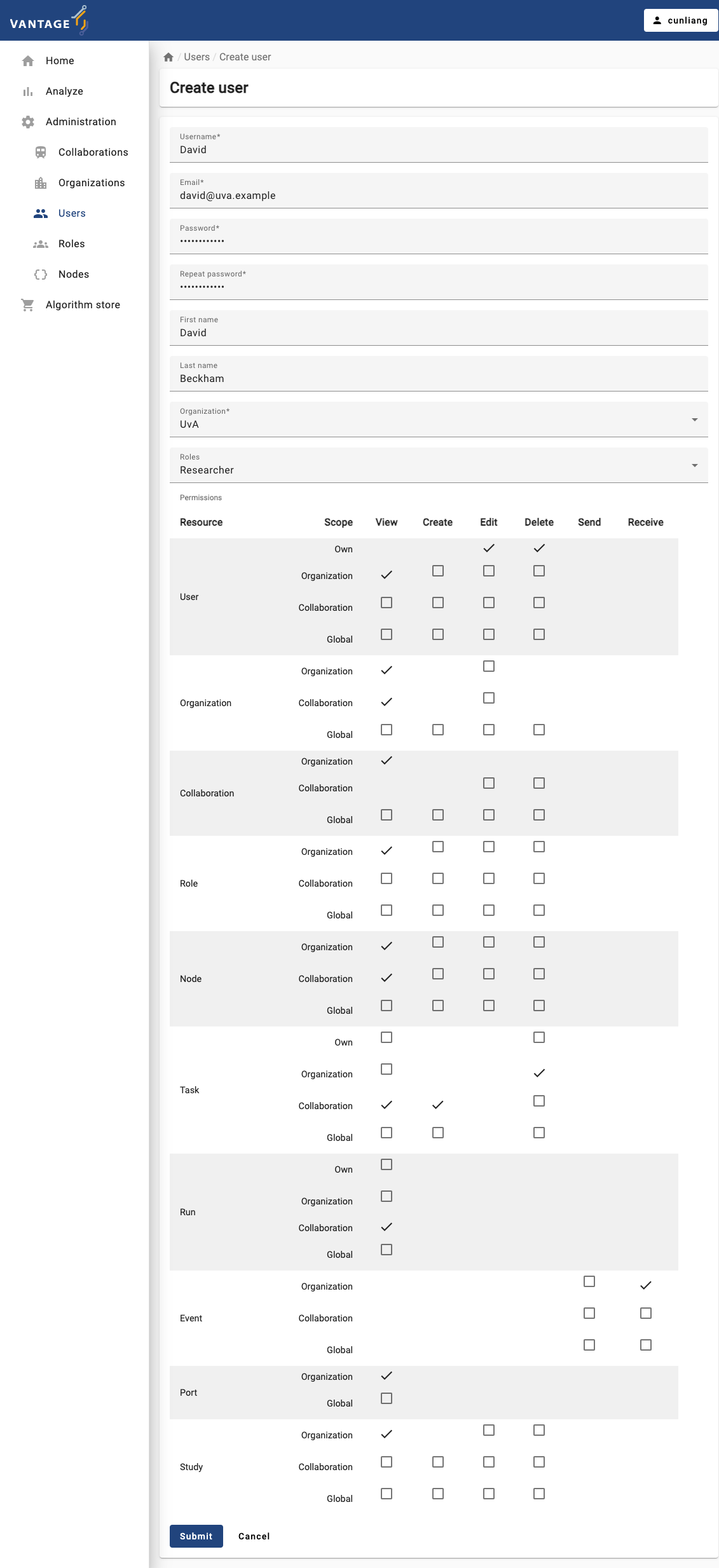

Create a new user

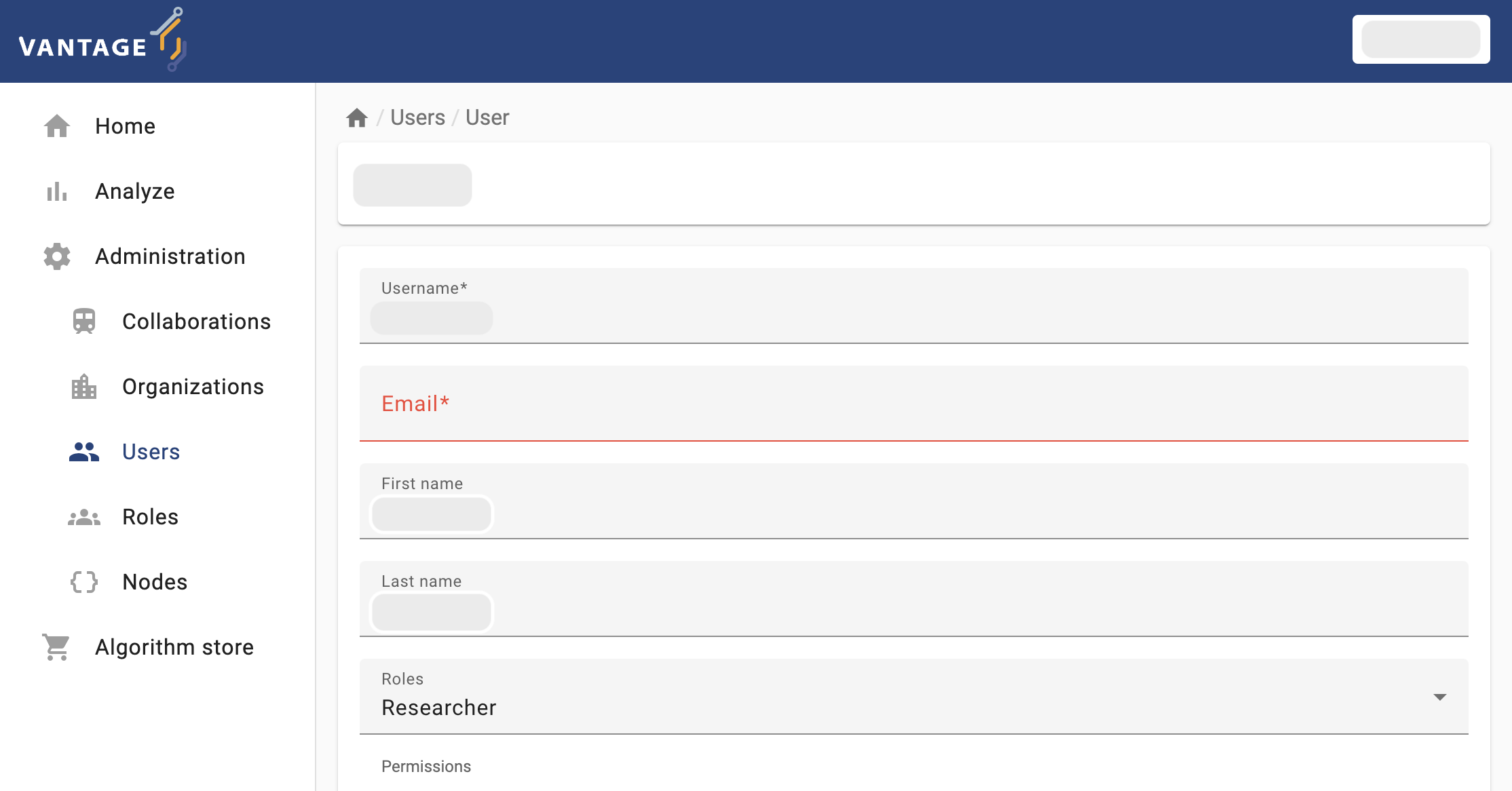

Now let’s create a new user for the organization we just created. Here are the steps:

- Click on the

Userstab in the administration page. - Click on the

Create userbutton. - Fill in the details of the new user.

- You can assign the user to an organization by selecting it from the

Organizationdropdown. Only one organization can be selected. - You can assign roles to the user by selecting them from the

Rolesdropdown. Here we assign theResearcherrole to the new user. You can give the user more permissions by assigning multiple roles or select the operation boxes in thePermissionssection.

- You can assign the user to an organization by selecting it from the

- Click on the

Submitbutton to create the new user.

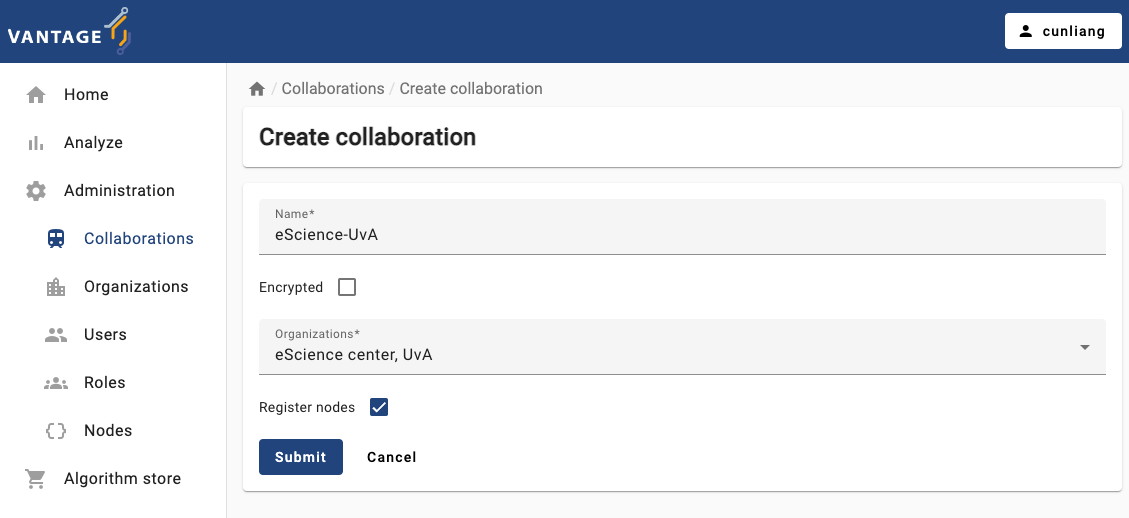

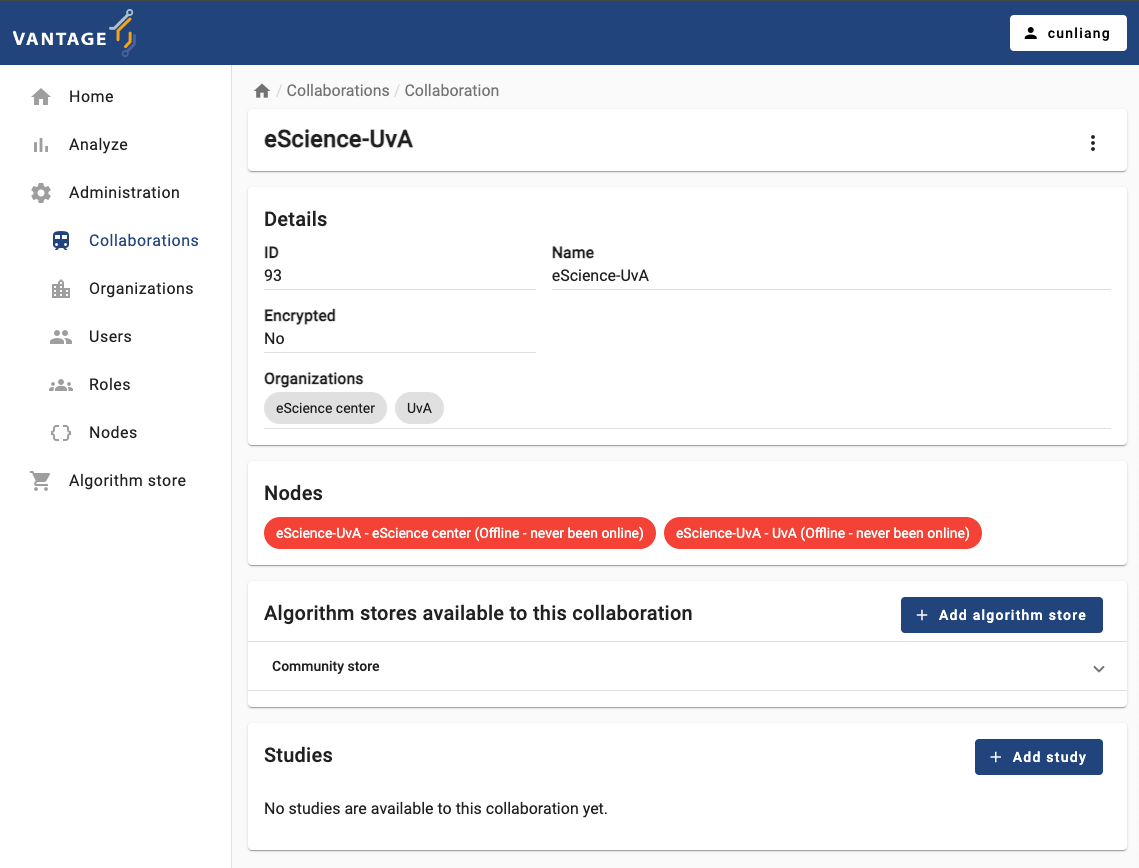

Create a new collaboration

A collaboration is a collection of one or more organizations. Let’s create a new collaboration between two organizations. Here are the steps:

- Click on the

Collaborationstab in the administration page. - Click on the

Create collaborationbutton. - Fill in the details of the new collaboration.

- For

Encryptedbox, you can select whether the collaboration should be encrypted or not. - You can select the organizations that will participate in the

collaboration by selecting them from the

Organizationsdropdown. - By default, we select the

Register nodesbox. This will ensure the nodes of the organizations are registered in the collaboration. If you don’t select this box, you will have to register the nodes manually later.

- For

- Click on the

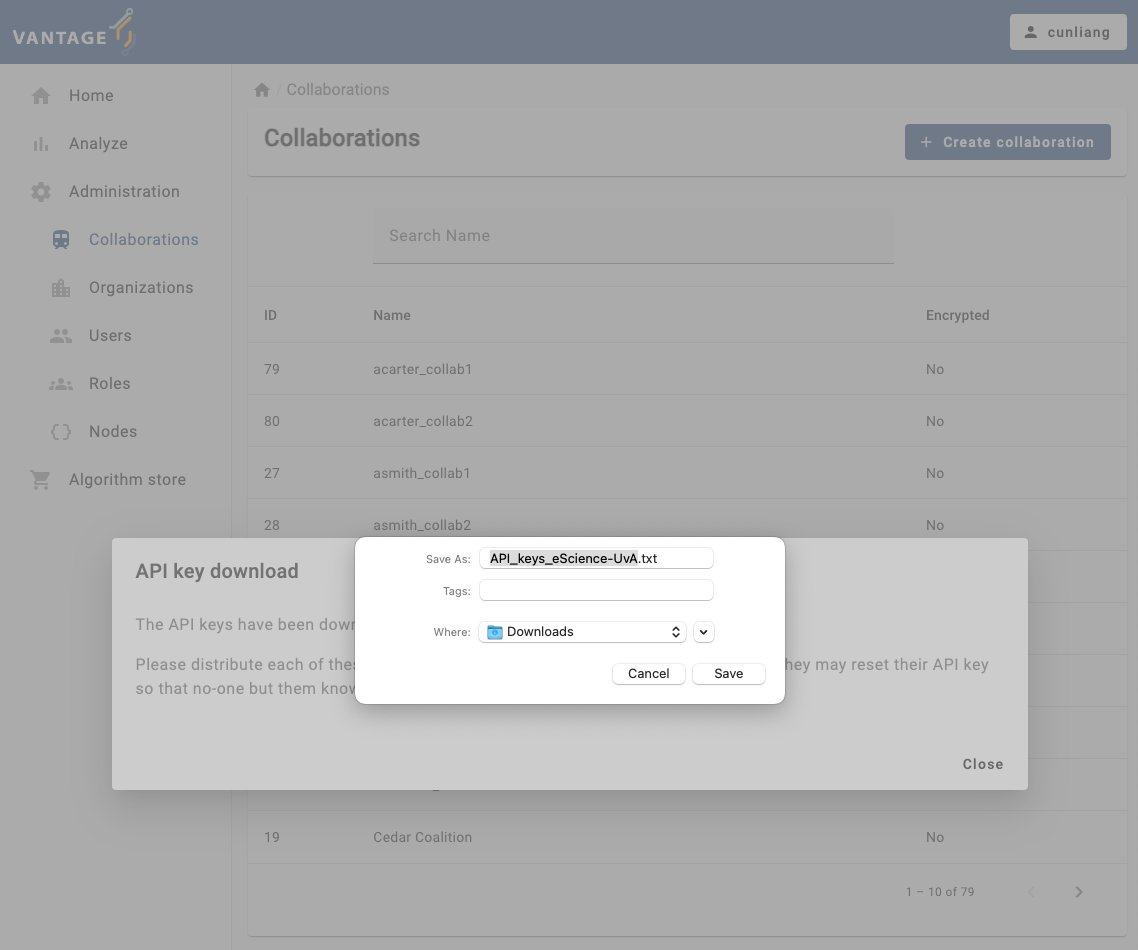

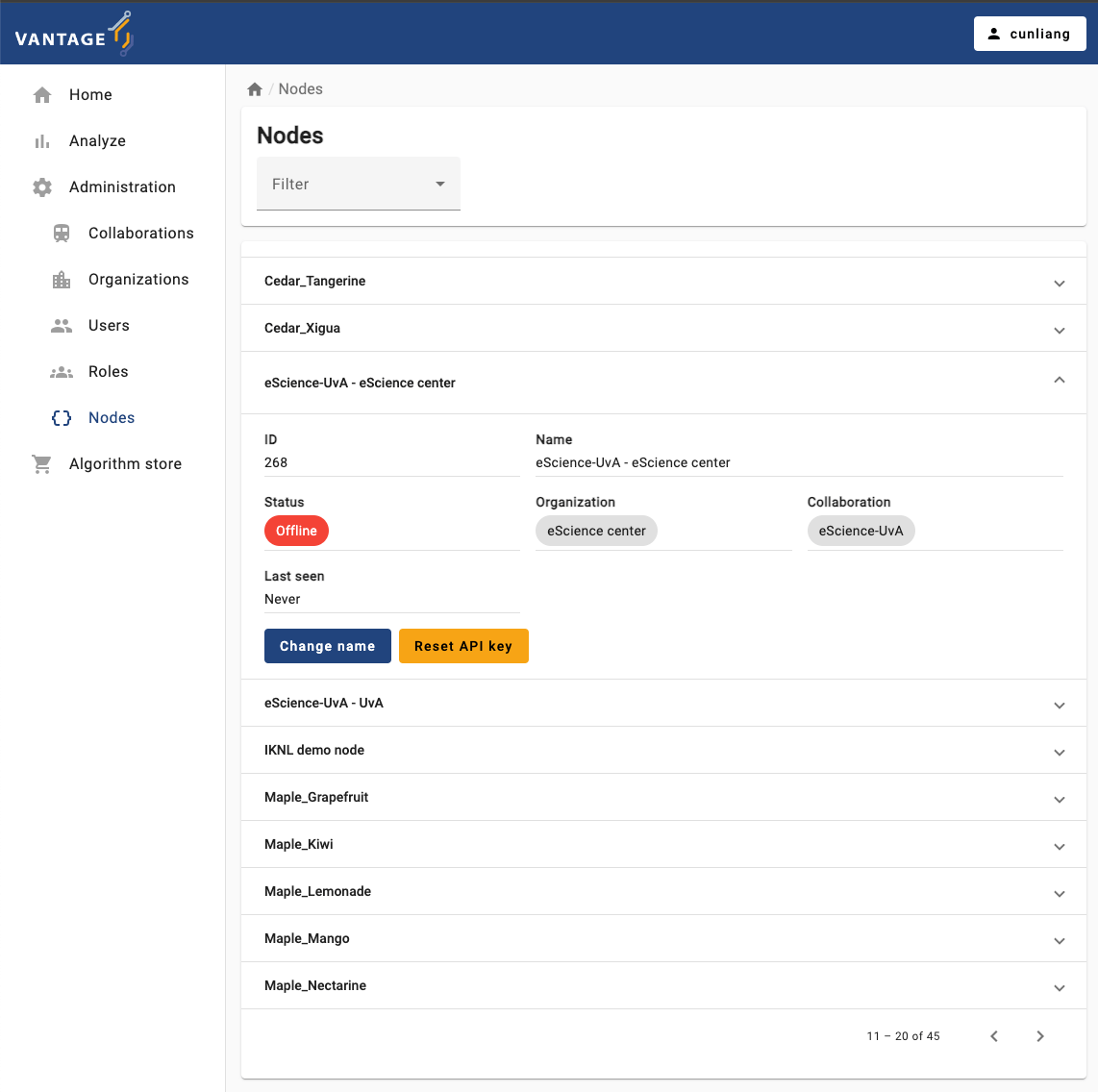

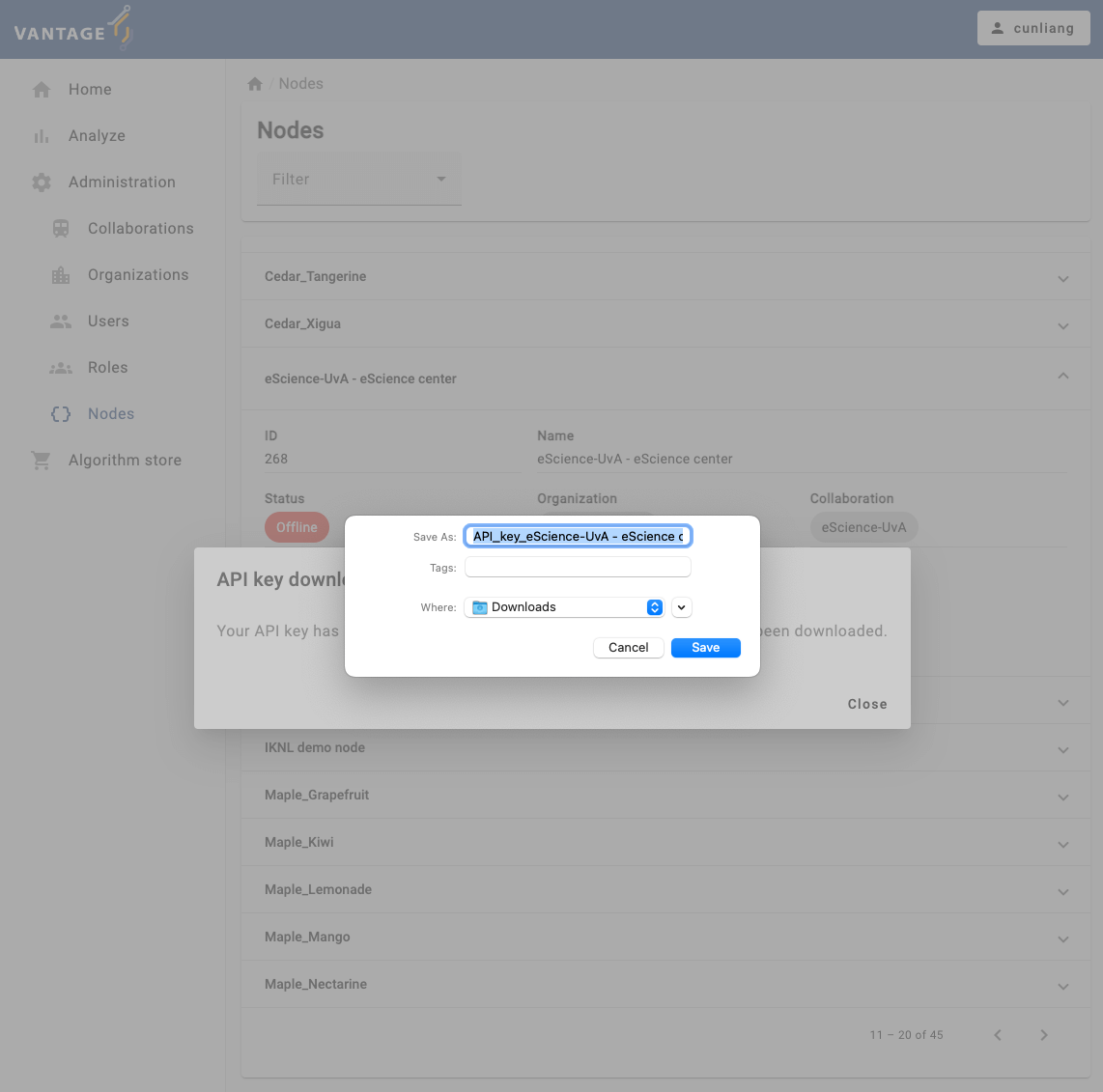

Submitbutton to create the new collaboration.- After submitting the form, you may see a dialog box to ask you to download the API key (on MacOS) or a dialog points out that the API key has been downloaded (on Windows). The API key is used to authenticate the nodes in the collaboration.

You will see a message:

API key download

The API keys have been downloaded.

Please distribute each of these keys privately to each of the organizations. Note that they may reset their API key so that no-one but them knows it.

You will need the API keys when you run the nodes to authenticate with the vantage6 server. Please save the API keys properly, we will need it in Chapter 6.

- After creating the collaboration, you can see the details of the

collaboration by clicking on the specific collaboration listed in the

Collaborationstab.- You will see what organizations are participating in the collaboration.

- You will see the nodes of the organizations that are registered in

the collaboration. In case one or more organizations did not register

their nodes when creating or updating the collaboration, you can

register them manually by clicking the

Register missing nodesbutton. Note that this button is not visible if all nodes are registered. - You can also see the algorithm store available for the

collaboration. You can add a algorithm store for the collaboration by

clicking the

Add algorithm storebutton. - You can also see the studies of the collaboration. You can add a

study by clicking the

Add studybutton.

Challenge 2: Manage vantage6 project using the UI

Go to the administration page in the vantage6 UI, and do the following:

- Create a new role

- Create a new user and grant them the new role you created

- Re-login to the vantage6 UI with the new user account and check if they have the permissions you granted them

- Vantage6 uses a permission system to control who can do what in the system.

- Vantage6 has default roles like

Root,Collaboration Admin,Organization Admin,Researcher, andViewer. - Vantage6 UI can be used to manage the entities of vantage6, like creating or deleting an organization, a user, or a collaboration.

Content from Running a PET analysis using the Python client

Last updated on 2024-10-03 | Edit this page

Overview

Questions

In the context of the Python client:

- How to connect to the vantage6 server?

- How to explore the

Clientobject? - How to check details of a collaborations?

- How to start a compute task?

- How to collect the results of a finished computation?

Objectives

After completing this episode, you will be able to:

- Understand the basic concepts of the vantage6 Python client.

use the Python client to …

- Connect to the vantage6 server.

- Use the Python client to get details of a collaboration.

- Create a task using the Python client.

- Collect the results of a finished computation using the Python client.

Prerequisite

Make sure you completed the Setup Episode before starting this episode. Some basic knowledge of Python is also required to complete the exercises in this episode.

The Python client

The vantage6 Python client is a library designed to facilitate interaction with the vantage6 server, to perform various tasks such as creating computation tasks, managing organizations, collaborations, users, and collecting results. It is a versatile alternative to the web-based user interface we have used in previous lessons.

Data scientists and administrators may use it to manage resources programatically. For example, to automate actions or integrating them on other applications. The Python client communicates with the REST API (wikipedia.org) of the vantage6 server, handling encryption and decryption where applicable.

Besides the Python client, there is also an R client (github.com) available. This client is more focused on starting federated analysis and does not provide tools to manage the server. Important to note that this client is poorly maintained and lags behind in terms of features. Therefore we do not recommend using it. You can find more information in the documentation (docs.vantage6.ai).

If your organization uses a different programming language, you can always create a client in that language by following the API documentation (docs.vantage6.ai).

In this workshop, we will only use on the Python client.

Connect & authenticate

Creating an instance of the vantage6 Python client is relatively straightforward. The user defines server connection details: server address, login credentials, and the organization’s private key in case encryption (docs.vantage6.ai) is used in the collaboration. In case the server has two-factor authentication (docs.vantage6.ai) (2FA) enabled, you should also enter the corresponding time-based 6-digit code accordingly.

To avoid leaking your username and/or password by accident, they can

be defined in a separate Python file (e.g., config.py),

which is then imported into the main script. This way, the main script

does not contain any sensitive information.

PYTHON

server_url = "https://<vantage6-server-address>"

server_port = 443

server_api = "/api"

username = "MY USERNAME"

password = "MY PASSWORD"

# Path to the private key, if encryption is enabled. Can be None if

# encryption is not used. Note that this key is the organization's

# private key. In case of this workshop we do not use encryption, so

# this can be None.

organization_key = NoneOnce you have created the Python module with the configuration settings, you can import it and create the client instance as follows:

PYTHON

from vantage6.client import Client

# It is assumed here that the `config.py` you just created is in the current

# directory. If it is not, then you need to make sure it can be found on

# your PYTHONPATH

import config

# Initialize the client object, and authenticate

client = Client(config.server_url, config.server_port, config.server_api,

log_level='info')

client.authenticate(config.username, config.password)

# In the case of 2FA, you should also include the 6-digit code:

# client.authenticate(config.username, config.password, '123456')

# In case encryption is used, this line can be used to set the organizations private

# key.

# client.setup_encryption(config.organization_key)1. Connect 🌍!

Connect to the vantage6 server using the Python client!

- Create the

config.pywith the your credentials and connection details. - Create a cell with the

clientscript with the code above. - Run the

clientcell as defined above to create the client instance. - Make sure to use the correct user / password

- Check the output to see if there are any errors

Make sure you have created the

config.pyfile with your credentials and theclientcell with the code above.-

Run the

clientcell. If the connection is successful, you should see the message--> Succesfully authenticated:Welcome to _ __ | | / / __ ____ _ _ __ | |_ __ _ __ _ ___ / /_ \ \ / / _` | '_ \| __/ _` |/ _` |/ _ \ '_ \ \ V / (_| | | | | || (_| | (_| | __/ (_) | \_/ \__,_|_| |_|\__\__,_|\__, |\___|\___/ __/ | |___/ --> Join us on Discord! https://discord.gg/rwRvwyK --> Docs: https://docs.vantage6.ai --> Blog: https://vantage6.ai ------------------------------------------------------------ Cite us! If you publish your findings obtained using vantage6, please cite the proper sources as mentioned in: https://vantage6.ai/vantage6/references ------------------------------------------------------------ ... --> Succesfully authenticated

Using the client

The Client instance offers a set of attributes that

correspond to the vantage6

server resources (Episode 3) also described in the official

documentation (docs.vantage6.ai). The available attributes are:

| Resource | Description |

|---|---|

client.user |

Manage users including your own user details |

client.organization |

Manage organizations or the organization that you are part of |

client.rule |

View all available permission rules |

client.role |

Manage roles (are collections of rules) |

client.collaboration |

Manage collaborations |

client.task |

Create new tasks and view their run data |

client.result |

Obtain results from the tasks |

client.util |

Provides utility functions for the vantage6 Python client. For example to reset your password |

client.node |

Manage nodes |

client.store |

Manage algorithm stores |

client.algorithm |

Manage algorithms that can be used for the computations |

Method and parameter documentation

There are many methods available in each of the resources and each

method has its own set of parameters. To know which parameters are

available for a specific method, you can use the help()

function in Python. For example, to get the documentation of the

client.organization.list() method, you can use the

following command:

OUTPUT

list(self, name: 'str' = None, country: 'int' = None, collaboration: 'int' = None, study: 'int' = None, page: 'int' = None, per_page: 'int' = None) -> 'list[dict]'

List organizations

Parameters

----------

name: str, optional

Filter by name (with LIKE operator)

country: str, optional

Filter by country

collaboration: int, optional

Filter by collaboration id. If client.setup_collaboration() was called,

the previously setup collaboration is used. Default value is None

This shows you that you can filter the list of organizations (among

others) by name, country, and collaboration. It is also possible to

request documentation of a higher level method, for example

help(client.organization) or even

help(client).:

To view all Client functions and their arguments without

using help() you can use the official

documentation (docs.vantage6.ai). Which is the same as the Python

client’s docstring.

Make sure you are viewing the documentation of the version of the client you are using. You can find the version of the client by one of the following commands:

or by running the following command in the terminal:

Permissions

Note that the authenticated user may not be allowed to perform all operations or view all resources. For example, a user may not be allowed to create a new organization, but may be allowed to list the organizations within all collaboration its organization participates in. The server will only allow the user to perform operations on the resources that the user has permission to perform.

The 5 basic operations

Almost all of the resources provide a get, list, create, update and delete operation.

When using the get and list methods a

dictionary is returned with the requested information. In the case of

the create and update methods typically the

created resource is returned. Finally in the case of delete

nothing is returned but a message is printed to confirm the

deletion.

2. Collect collaboration details

Before starting a task, you need to know the details of the collaboration you are working with. Use the Python client to get the details of the collaboration you have access to. Write down the name and ID of each collaboration.

Use help(client.collaboration.list) to see which

arugments you can use to filter the collaborations.

OUTPUT

[

{'id': 168, 'name': 'Lychee Labs', ...},

{'id': 158, 'name': 'Pineapple Paradigm', ...},

{'id': 155, 'name': 'Huckleberry Hub', ...},

{'id': 140, 'name': 'Mango Matrix', ...},

{'id': 128, 'name': 'Apple Innovations', ...},

{'id': 170, 'name': 'eScience center', ...},

{'id': 165, 'name': 'Grapefruit Group', ...},

{'id': 145, 'name': 'Raspberry Revolution', ...},

{'id': 136, 'name': 'Ivy Berry Solutions', ...},

{'id': 166, 'name': 'Huckleberry Holdings', ...}

]Additional operations

Some resources do not provide all five operations and some resource provide additional operations. For example:

- it is not possible to create new rules. In other words:

client.rule.createdoes not exist. - the

client.taskhas aclient.task.killmethod which is able to stop a task that currently is running.

Top level methods

Up untill now we only discussed Client methods that are

bound to an resource. There are also some methods that are not bound to

a specific resource. Examples are:

-

client.authenticate()to authenticate the user. You have already used this method when you authenticated to the server. -

client.setup_encryption()to setup the encryption. -

client.wait_for_results()to wait for the results of a task.

3. Find documentation

Find the documentation on how to reset your password 🔑 in case you

forgot it. You can use the help() to explore the client

functions.

Have a look at the client table, and see if you can find a resource group that might contain the method you are looking for.

Use the help() function to find the documentation of the

client.util resource.

Identifiers are key

It is important to note that the Python client use identifiers rather than names to select resources. For example, to filter the organizations that belong to a a given collaboration, you need to know the collaboration’s identifier first.

In a previous challenge, you were asked to get the details of the collaborations you have access to. This is common practice when working with the vantage6 Python client.

It is also possible to obtain these identifiers through the UI. However, when working with the UI, identifiers are not as important as the names can be used to identify the resources. But also for the UI, it is important to know the identifiers of the resources as names are not always unique.

The reason for this is that the UI is designed to more be user-friendly, and it is expected that users will interact with the resources using their names. Typically they will select the resources from a list or dropdown. Since names do not always have to be unique (e.g. task names) it is impossible to identify a resource uniquely by its name.

Now that we understand the basic concepts of the vantage6 Python

client, let us get some more details about our collaborations. First, as

before, we collect the details of the collaborations we have access to.

We do so by specifying an additional parameter fields to

the list() method. This parameter allows us to specify

which fields we want to see in the output. This makes it more readable

and easier to find the information we are looking for.

OUTPUT

[

{'id': 12, 'name': 'Birch Brotherhood'},

{'id': 9, 'name': 'Pine Partners'},

{'id': 11, 'name': 'Cedar Coalition'},

{'id': 10, 'name': 'Maple Consortium'},

{'id': 15, 'name': 'demo'},

{'id': 8, 'name': 'Oak Alliance'},

{'id': 14, 'name': 'Redwood Union'},

{'id': 13, 'name': 'Willow Network'}

]Then, we are interested in all the organizations that participate in

one of the collaborations. Lets assume that the collaboration ID is 1.

We then can get the organizations that are part of this collaboration by

using the client.organization.list() method with the

collaboration parameter set to 1.

OUTPUT

[

{'id': 171, 'name': 'IKNL'},

{'id': 172, 'name': 'new_organization'}

]Write down the ID of each organization and collaboration. You will need them in the next challenges.

Creating a new task

Before starting an analysis we need several details about the collaboration and the analysis to be performed. This includes the organization and collaboration identifiers, we have just collected.

Before we start the analysis, let us check if everything is in place:

Network

- ✔ Connect to the vantage6 server using the Python client.

- ✔ Use the Python client to get the details of the collaboration and its organizations you have access to.

- ⚠ Check the status of the nodes

Average Algorithm

- ✔ published at:

harbor2.vantage6.ai/demo/average. - ✔ We are going to use the

partial_average()function. - ✔ The function requires a

column_nameparameter, we are setting this to ‘age’.

Node status

As the checklist above indicates, we have not checked the status of the nodes. You can start an analys when nodes are offline, they will start the analysis once they are online. In case a node is offline, you might need to inquire with the node owner to get it back online.

4. Check the status of the nodes

Use the Python client to check the status of the nodes that are part of the collaboration you are interested in.

To check the status of the nodes, you can use the

client.node resource.

See help(client.node.list) to see how to get the details

of the nodes of a given collaboration. You can also use the

fields parameter to get only the information you are

interested in.

You can obtain node details by using the

client.node.list() method. To filter the nodes you are

interested in, you can use the collaboration parameter in

the client.node.list() method:

You can also specify the fields parameter to get only

the information you are interested in:

You can also use the is_online parameter to filter the

nodes that are online. But for now it would be good to see both online

and offline nodes.

OUTPUT

[

{

'id': 155,

'name': 'IKNL demo node',

'status': None

}

]One of the nodes in our collaboration is offline. In the real world,

you would need to contact the node owner to get the node back online.

But for the purpose of this workshop we have defined a study

that contains only online nodes. Which has a name that ends with

Subset. You can find the study ID by using the

client.study.list() method. Write down the ID of the

study.

Task definition

A task in vantage6 is a request to execute an algorithm on a given organization. When creating a task, you need to specify the following:

- The collaboration[, study] and organization identifiers.

- The algorithm to be executed.

- The input parameters for the algorithm.

The average algorithm we are going to use is the same as in Episode 3.

This algorithm has two functions: partial_average() and

central_average(). If you do not know the difference

between partial and central function, you should read

How

algorithms run in vantage6 (Episode 2) again.

We can use the client.task.create() method to create a

new task to be executed by the nodes.

OUTPUT

create(organizations: 'list', name: 'str', image: 'str', description: 'str', input_: 'dict', collaboration: 'int' = None, study: 'int' = None, store: 'int' = None, databases: 'list[dict]' = None) -> 'dict' method of vantage6.client.Task instance

Create a new task

Parameters

----------

organizations : list

Organization ids (within the collaboration) which need

to execute this task

name : str

Human readable name

image : str

Docker image name which contains the algorithm

description : str

Human readable description

input_ : dict

Algorithm input

collaboration : int, optional

ID of the collaboration to which this task belongs. Should be set if

the study is not set

study : int, optional

ID of the study to which this task belongs. Should be set if the

collaboration is not set

store : int, optional

ID of the algorithm store to retrieve the algorithm from

databases: list[dict], optional

Databases to be used at the node. Each dict should contain

at least a 'label' key. Additional keys are 'query' (if using

SQL/SPARQL databases), 'sheet_name' (if using Excel databases),

and 'preprocessing' information.

Returns

-------

dict

A dictionairy containing data on the created task, or a message

from the server if the task could not be createdLets start by defining the input for the task. The

partial_average() function requires a

column_name parameter. We can define the input as

follows:

Basically we are defining the method to be executed, the arguments

and keyword arguments for the method. In other words, we just created a

function call in Python that would look like this:

partial_average(column_name=age). If you are not familiar

with Python’s args and kwargs, you can read more about them at W3C

Schools: args

(w3schools.com) and kwargs

(w3schools.com).

Now that you have defined the task input, you can create and start it by also specifying (using the IDs we collected earlier) which organizations and for which collaboration, it will be executed:

Database parameter

We have not yet explained the databases parameter. This

parameter is used to specify the database that the nodes will use to

execute the algorithm. Each node can have multiple databases, and you

can specify them in the databases parameter. We will go

into more detail about this in Seting

up a vantage6 node (Episode 6).

Obtaining results

A client’s task execution request is asynchronous. This means that

once the client.task.create() method is invoked, the task

will begin running in the background, returning the control to the

Python program immediately (i.e., without waiting for the task to

complete).

This means that in case you want to use the task result in the remainder of your code, your program needs to wait until the task is completed, so you can get access to the results (or to the error details, if something goes wrong).

You can use the client.wait_for_result() method to make

the program execution wait until the task is completed. For that, you

need the ID of the task you just created, which was included in the

dictionary returned by the client.task.create() method. For

the task execution request of the code snippet above, this will look

like:

Aggregate results

The results contain the output of the algorithm. In the case of the

partial_average() function, the output is not yet

aggregated. This means that the output of each node is returned

separately. In the case of the central_average() function,

the output is aggregated and only the aggregated result is returned.

For now we can aggregate the results ourselfs:

Create a central task

In the previous section you created a task to run the

partial_average() function. Now, create a task to run the

central_average() function.

5. Run central method

In section Creating a new task it

is explained how to create a task to run the

partial_average() function. Now, create a task to run the

central_average() function. ⚠ Make sure to

only send the task to a single organization.

PYTHON

input_ = {

'method': 'central_average',

'args': [],

'kwargs': {'column_name': 'age'}

}

average_task = client.task.create(

organizations=[1],

study=1,

name="name_for_the_task",